Note: You can find this analysis updated for 2020 here.

Dev fell in love with code at a young age.

He graduated from college with an engineering degree and then joined the Navy as a cyber warfare engineer. Pressured by his highly educated Persian parents, Dev returned to school after military service to complete a master's in computer science. He has spent his entire career since in software development, mostly coding in C++.

He realized early on that workloads were moving to the cloud and spent years retooling and upgrading his skill set, familiarizing himself with each of the major cloud vendors. He championed his team's transition from an on-premises Oracle database to a next-gen database hosted in the public cloud.

Always on the cutting edge, Dev regularly attends employer-sponsored training sessions and hackathons.

Dev worked with all of the major programming methodologies over the years but eventually settled on Agile, becoming a big fan.

Now a manager at a large, 15,000+ employee technology giant, he takes time to work out 3-4 times per week at the free company gym. Even after completing an agile sprint he keeps moving - jogging home after work most days.

After promotion, Dev gave his second monitor to one of his younger direct reports. He wasn't spending as many hours furiously coding as he used to, so the extra real estate felt unnecessary.

A big proponent of healthy work habits, last year he procured a full set of standing desks for his entire team and required that all team members eat lunch away from their workstations. Rarely rushed himself, he never skips meals.

During the week, Dev wakes up just after 11am. Every. Single. Day.

Must be nice, right?

However, at 50 years of age, Dev worries that his career has already peaked. He has no plans to retire any time soon, but whispers of ageism in tech and seeing his older colleagues being laid off have him wondering if he's next.

That said, he knows he could make up lost income working independently as a freelancer. He has a hunch he'd make even more running solo.

A proud, loving father, Dev hopes his daughter follows in his footsteps. He sees a bright, better future for her. As far as he's concerned, the world is her oyster.

Who is this guy?

If it's not clear already, Dev's not a real person.

But his personal and professional characteristics really do correlate with higher income.

Age. Gender. Race.

Education. Professional experience. Programming languages.

Methodology. Hackathons. Even how many monitors he uses.

The traits that correspond with higher pay are not always what we would expect - nor necessarily what we'd hope.

The economics of developer pay is an important topic for me. Developers are the key input in the production of software. You cannot understand tech unless you understand how developers get paid—you just can't.

However, the publicly available analysis of this important issue is quite poor.

The problem with most pay analyses

As covered in my last post, Stack Overflow conducts an annual survey of software developers, asking about various aspects of their careers, like income, job title, etc.

One disappointing feature of most pay analyses is that they show the data without controlling for any other variables. Avoiding any math more complex than simple averages, the typical analyses of pay have no way to understand a multivariate world.

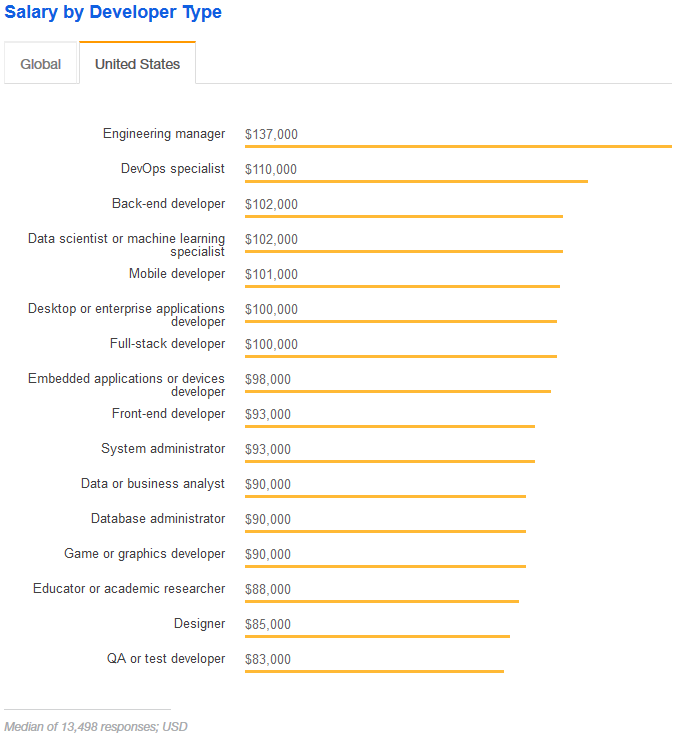

Stack Overflow’s own write-up of the survey results does this at times, taking the simple average of income across all full-stack developers, for example, and comparing the same metric for DevOps specialists:

Statements like “Engineering managers, DevOps specialists, and data scientists command the highest salaries” are then made. Though technically true, they tend to mislead readers who are not well-versed in statistics into thinking that these are ceteris paribus (all else equal) comparisons (“X makes more than Y, all else equal”), which they are not.

To their credit, Stack Overflow at least takes the additional step of comparing these salaries against years of professional experience, but that is just one of many possible controls. In fact, the entire survey provides a rich set of potential controls to “hold equal” and thereby generate more intuitively accurate statements about potential relationships (“X developers make more than Ys who are otherwise similar”, which is a qualitatively and likely quantitatively different statement than “Xs make more than Ys”).

Unfortunately, most public discussion of pay does not control for even a single variable. This isn’t lying with statistics so much as it is misleading with them.

Said simply—we don’t only want to know “how much more or less do 45-year-old developers make compared to 25-year-old developers.” More importantly:

“How much does a 45-year-old developer earn relative to a 25-year-old developer who is otherwise equivalent (in skills, experience, company size, country etc.)?”

Both are important questions, but to me, the latter is much more interesting and intuitive. It also happens to be much more difficult to answer—hence it is rarely attempted.
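To make the difference between the two questions concrete, here is a minimal sketch on made-up data. It is not the model behind this analysis. The numbers, the variable names, and the assumption that pay depends only on experience are all hypothetical, chosen so that role has no true effect while DevOps developers happen to be more senior.

```python
import numpy as np

# Hypothetical toy data: pay depends only on experience, not on role.
years = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], dtype=float)
is_devops = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 1], dtype=float)  # DevOps skews senior
pay = 60.0 + 3.0 * years  # in $ thousands; role has no effect by construction

# Uncontrolled comparison: difference in simple averages.
raw_gap = pay[is_devops == 1].mean() - pay[is_devops == 0].mean()

# Controlled comparison: regress pay on role AND experience together.
X = np.column_stack([np.ones_like(years), is_devops, years])
coef, *_ = np.linalg.lstsq(X, pay, rcond=None)
controlled_gap = coef[1]  # coefficient on the DevOps indicator

print(f"raw gap: {raw_gap:.1f}k")
print(f"controlled gap is ~0: {abs(controlled_gap) < 1e-6}")
```

In this toy setup the simple averages differ by 15 (thousand), yet the coefficient on role is zero once experience is held equal. The raw comparison was only measuring seniority.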

This is an attempt to do better. Not perfect, but much better.

Results

I break down the results by survey question, with a chart displaying the controlled effect of each trait on income, in addition to 95% confidence intervals. Correspondingly, any references to statistical significance represent p-values < 0.05.

The results represent a subset of approximately 11,000 U.S.-based developers from the Stack Overflow survey.


For more detail on the methodology, please see the appendix at the end.

Demographics

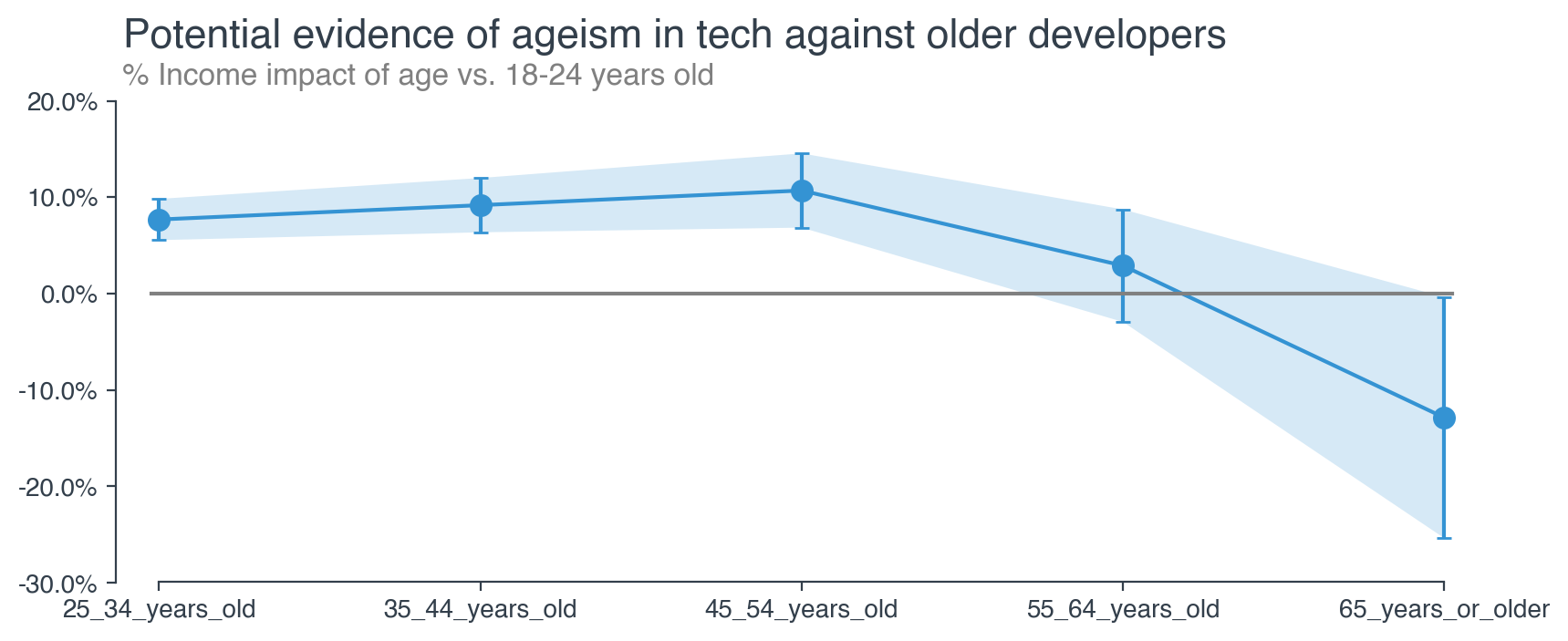

Initially, age increases earnings. That said, the annualized compound gain is quite meager—about 0.2% through 45-54 years of age—with most of that happening before a developer turns 25.

More interesting is how the impact of age declines and turns negative (relative to a developer of 18-24 years of age) as a developer approaches 65:

- At this level of precision, I cannot distinguish the pay of a developer 55 or older from an otherwise similar one younger than 25

- I can say with reasonable certainty that a developer over the age of 65 makes less than one of 18-24 years of age who is otherwise similar.

Ageism could be playing a role. Tech companies, especially startups, are perceived to have a preference for younger employees. Evidence of ageism is often anecdotal, but this data is at least suggestive that there may be something real behind this concern.

Unfortunately, ageism is notoriously difficult to prove or disprove, exactly because it is exceedingly rare to find a 25-year-old who is, in every way other than age, the same as a 65-year-old.

- For starters, a 25-year-old developer cannot possibly have much more than 5 years of professional development experience, while a 65-year-old developer almost certainly does. And that is just one variable.

This is certainly an issue worth exploring further.

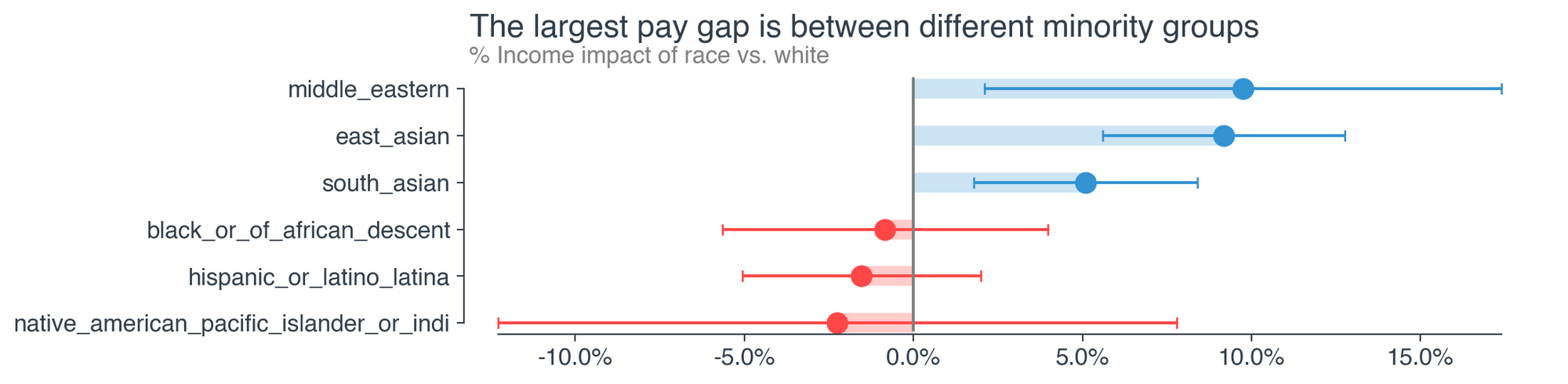

Asian and Middle Eastern developers are paid much more than similar white developers, and the pay premium is likely larger than the pay discount faced by other minorities.

Black developers appear to earn 0.8% less than white developers with similar traits, though with a wide enough confidence interval to lose statistical significance.

- The lack of precision (plus or minus 5%) is frustrating but inevitable given the low proportion of black software developers in the population and the dataset

- Note: Only 1.5% of developers in my dataset are black (not significantly different than the overall developer population)

Hispanic or Latino/a developers earn 1.5% less than similar white developers. Again, we see reasonably large confidence intervals due to lack of sufficient data.

That there is such a large pay premium for Asian and Middle Eastern developers is an interesting finding in itself and one that warrants further exploration

- The estimates range from 5% to 10%, and all are statistically significant.

The size of these effects is especially impressive given the small proportion of the dataset each of these minority groups represents:

- East Asian—2.8%

- Middle Eastern—0.6%

- South Asian—3.6%

That they are statistically significant even with few data points suggests these effects are quite real.
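To put the shares quoted in this section in perspective, the sketch below converts them into rough head counts using the approximate sample of 11,000 developers. It also applies a textbook two-group formula for how the standard error of a group effect scales with group share. That formula ignores every other control in the model, so treat it as a heuristic for why small groups produce wide intervals, not as the calculation used here. The function names are illustrative.

```python
import math

SAMPLE_SIZE = 11_000  # approximate U.S. subset quoted in this post

# Shares of the dataset quoted in this section.
shares = {
    "Black": 0.015,
    "East Asian": 0.028,
    "Middle Eastern": 0.006,
    "South Asian": 0.036,
}

def approx_group_size(share, total=SAMPLE_SIZE):
    """Rough respondent count implied by a share of the sample."""
    return round(share * total)

def se_in_sd_units(share, total=SAMPLE_SIZE):
    """Standard error of a simple two-group mean difference, expressed in
    units of the residual standard deviation: 1 / sqrt(n * p * (1 - p))."""
    return 1.0 / math.sqrt(total * share * (1.0 - share))

for group, share in shares.items():
    print(f"{group}: ~{approx_group_size(share)} respondents, "
          f"SE ~ {se_in_sd_units(share):.3f} residual SDs")
```

The smaller the group, the larger the standard error, so an effect has to be proportionally bigger to clear the significance bar.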

Pay discrimination is a serious issue that warrants rigorous analysis, much more than what I’ve done here.

- All in all, the results suggest that attempts to level the “paying” field should also focus on equalizing pay across various racial minority groups, not simply between minority groups and the majority

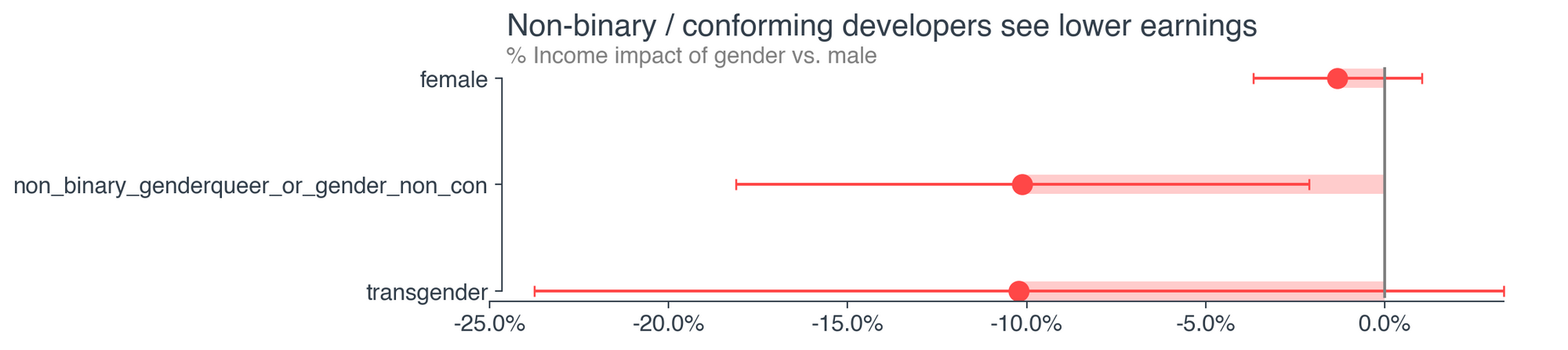

The pay gap for female software developers is similar to that of black developers in both its small magnitude and its lack of statistical significance: roughly 1.3%, plus or minus 2.4%.
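A "plus or minus" figure like this can be read as the half-width of the 95% confidence interval, and an estimate is significant at the 5% level only if that interval excludes zero. The sketch below applies that rule to two estimates quoted in this post. It assumes a normal approximation (critical value 1.96) and treats the gaps as negative numbers. The helper names are illustrative, not taken from the analysis code.

```python
Z_95 = 1.96  # normal critical value for a two-sided 95% interval (assumed)

def interval(estimate, half_width):
    """Confidence interval as (low, high)."""
    return (estimate - half_width, estimate + half_width)

def is_significant(estimate, half_width):
    """True when the interval lies entirely on one side of zero."""
    low, high = interval(estimate, half_width)
    return low > 0 or high < 0

def implied_standard_error(half_width, z=Z_95):
    """Back out the standard error from a 95% half-width."""
    return half_width / z

# Estimates quoted in this post, in percent (sign convention is an assumption).
female_gap = (-1.3, 2.4)
black_gap = (-0.8, 5.0)

for name, (est, hw) in {"female": female_gap, "black": black_gap}.items():
    low, high = interval(est, hw)
    print(f"{name}: [{low:.1f}%, {high:.1f}%], significant: {is_significant(est, hw)}")
```

Both intervals straddle zero, which is why neither gap can be called statistically significant at this sample size.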

Gender non-binary / non-conforming developers face a large pay discount of 10% relative to male developers.

Given very few observations in the dataset, the pay effect for transgender developers cannot be estimated precisely enough to conclude anything meaningful.

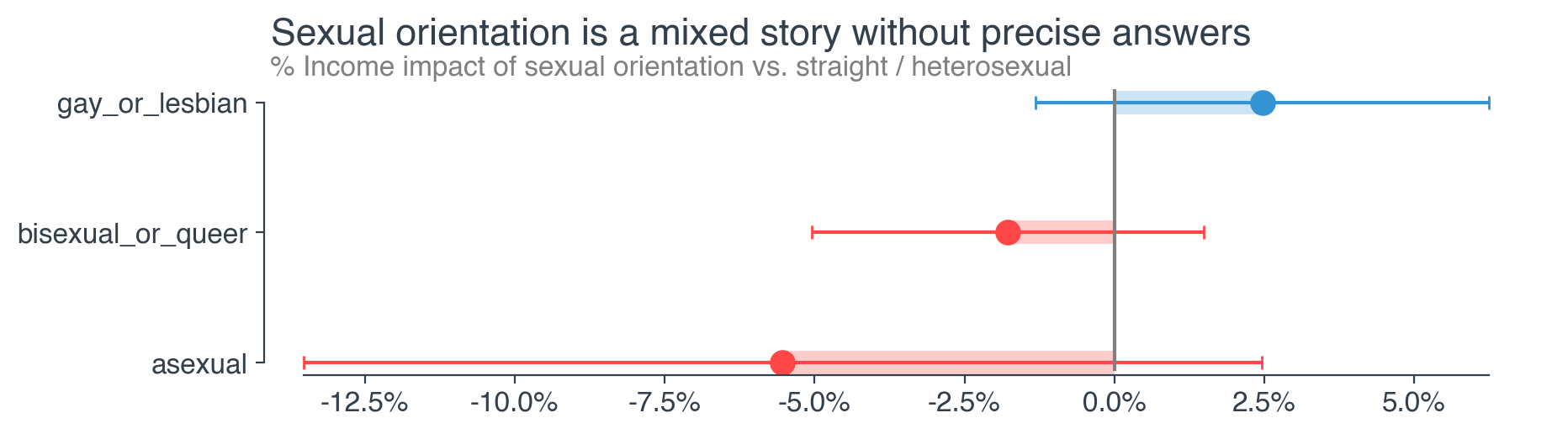

Gay and lesbian software developers appear to make 2.5% more than straight / heterosexual developers, but this estimate is not statistically significant.

In fact, given the confidence intervals above, none of the categories of sexual orientation are statistically significantly different from heterosexual.

- Again, this is in part due to limited data—only 2.4% of developers in the sample are gay or lesbian, for example

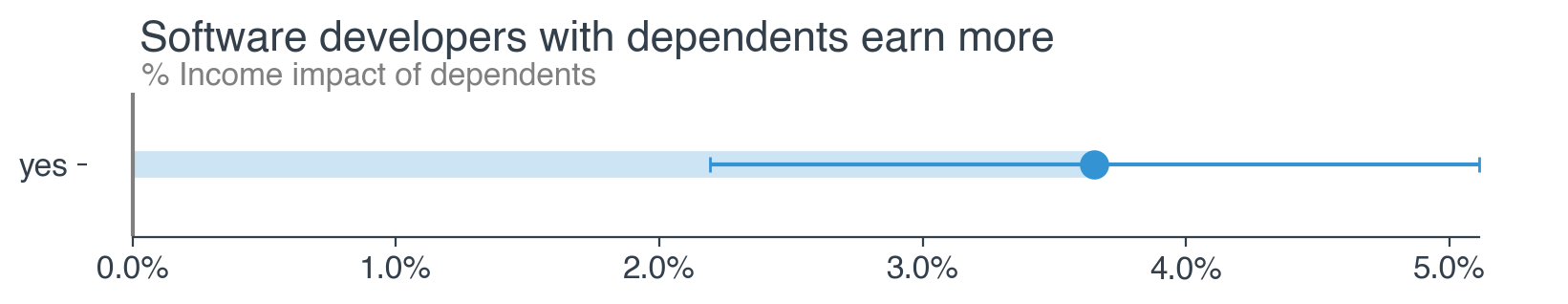

Good news for parents—developers with dependents (children, grandparents etc.) earn 3.7% more than those who don’t, controlling for other factors.

There are a number of potential explanations for this

- Workers with dependents likely want / need the extra income and hence seek out jobs that pay more

- I do not have evidence to conclude that employers are specifically choosing to pay those with dependents more than others, though this could also be the case

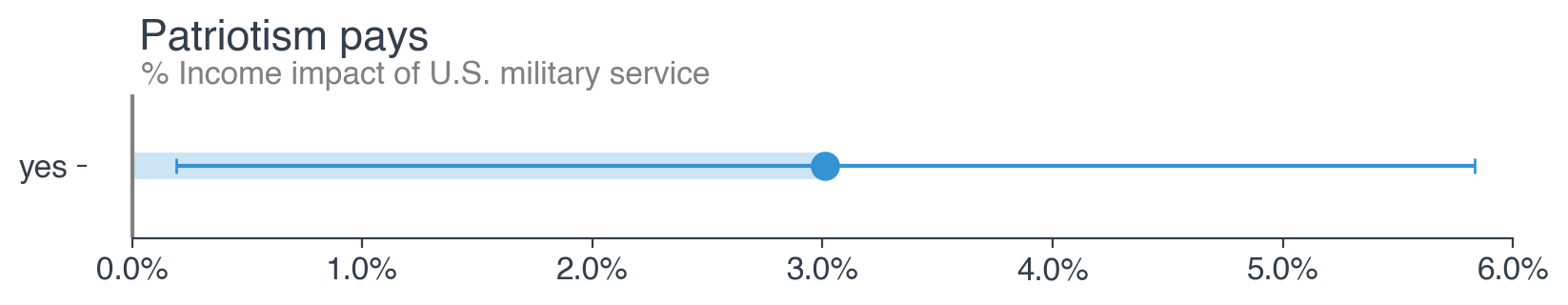

Former and current U.S. military service members earn 3% more than those without prior military service.

As with other traits, there are any number of potential drivers of the veteran pay premium, and it's inherently difficult to pinpoint the most likely explanation.

Takeaways

- Drawing justifiable conclusions around the impact of demographic characteristics on income is necessarily tricky given the highly imbalanced nature of the developer population

- Caution is advisable when positing causal connections, and these should ideally be accompanied by some theoretical mechanism

- That said, the results are interesting and should hopefully serve as a starting point for deeper and more rigorous analysis.

Education

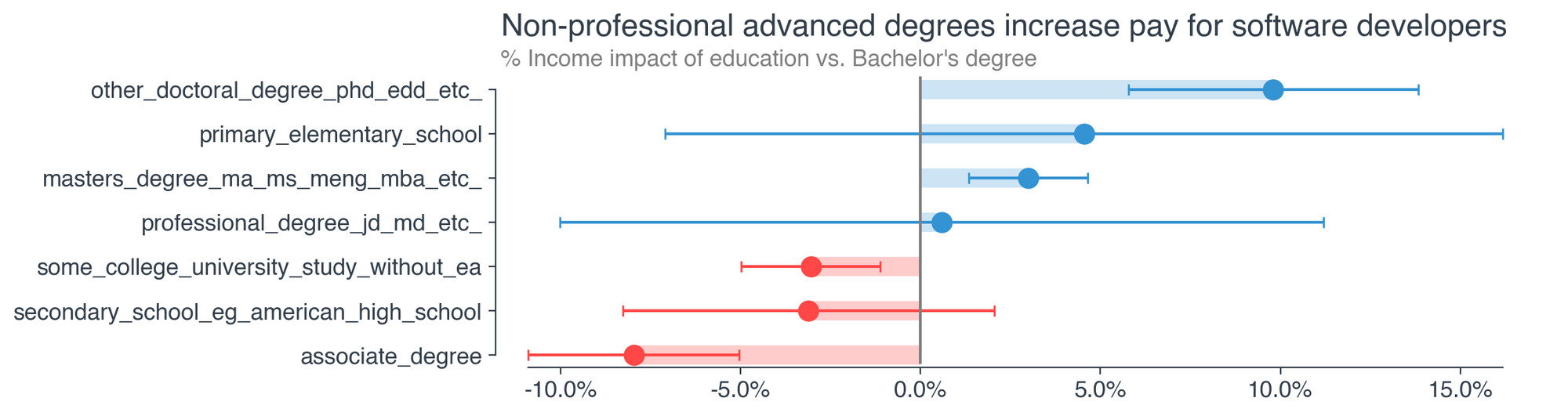

Advanced, non-professional degrees drive higher earnings. A master’s or doctoral degree (PhD etc.) drives statistically significant gains of 3% and 10%, respectively, relative to an equivalent developer with only a bachelor’s.

Going to college but not completing is associated with a 3% cut in earnings, while graduating with an associate degree decreases earnings by about 8% vs. a bachelor’s. Again, both are statistically significant.
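Each effect here is stated relative to a baseline category, in this case a bachelor’s degree. One common way to get estimates of that form is to give every other category its own indicator column and leave the baseline out, so the intercept absorbs it. The sketch below shows the mechanics on a noiseless, made-up pay index built from the percentages quoted above. The index of 100 and the data layout are assumptions for illustration, not the actual model specification.

```python
import numpy as np

# Effects quoted above, in percent relative to a bachelor's degree.
effects = {"some college": -3.0, "associate": -8.0, "master's": 3.0, "doctoral": 10.0}
BASELINE = "bachelor's"
levels = [BASELINE] + list(effects)

# Hypothetical noiseless pay index: 100 for the baseline, shifted by each effect.
education = np.array(levels * 3)
pay_index = np.array([100.0 + effects.get(level, 0.0) for level in education])

# One indicator column per non-baseline level; the intercept absorbs the baseline.
X = np.column_stack(
    [np.ones(len(education))]
    + [(education == level).astype(float) for level in effects]
)
coef, *_ = np.linalg.lstsq(X, pay_index, rcond=None)

intercept = coef[0]
recovered = dict(zip(effects, coef[1:]))
for level, value in recovered.items():
    print(f"{level}: {value:+.1f} vs. {BASELINE}")
```

Each fitted coefficient is the difference between that category and the omitted one. Changing the baseline would shift every number on the chart without changing the gaps between categories.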

The effect of professional degrees like JDs and MDs is not statistically significant due to wide confidence intervals.

- Very few people with these degrees are working as software developers, so establishing a precise estimate is difficult.

Not much can be said for developers that never reached the college level—due to lack of data, the confidence intervals are too wide to draw meaningful conclusions. However, these folks are almost certainly worse off than someone with an advanced degree.

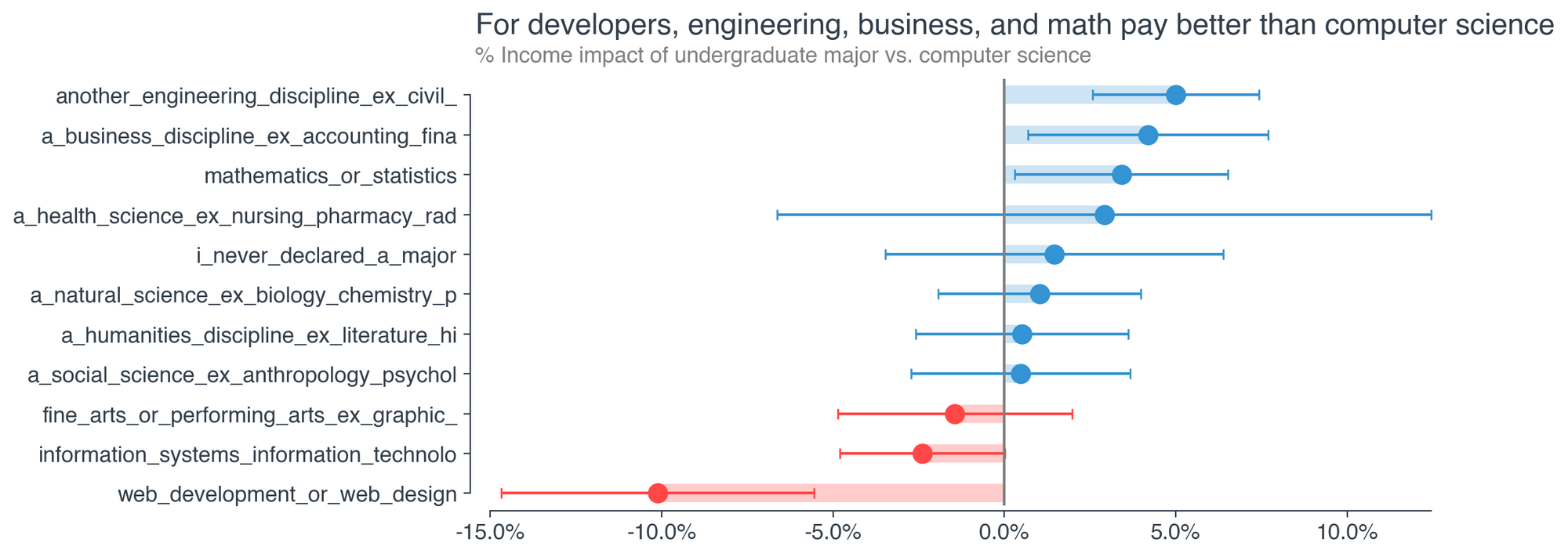

Interestingly, computer science is not the best-paid college major.

- In fact, other engineering disciplines (civil, mechanical, electrical, etc.) (5% increase), business degrees (accounting, finance, etc.) (4% increase), and math / stats majors (3.4% increase) all earn more, all else equal.

Outside of these areas, college major is largely irrelevant to developer pay. The meme of the underpaid, over-educated sociology major doesn’t apply.

- This could be encouraging in that it shows you can be paid for good dev work regardless of your field of study

- With dev schools and bootcamps like Lambda popping up all over, this is great news if you are considering a career change and worried you might be at a disadvantage.

- On the other hand, it does raise the question—why aren’t developers who studied the most apparently relevant field paid more than all others?

- Does this say anything about undergraduate computer science teaching, which is often criticized for being irrelevant and out of touch with professional software development work out in the wild?

The exceptions are information systems / technology and web development / design degrees, which bear a pay discount of 2.4% and 10% respectively.

- Why? My take is that these degrees are often associated with private, for-profit schools not often known for their quality

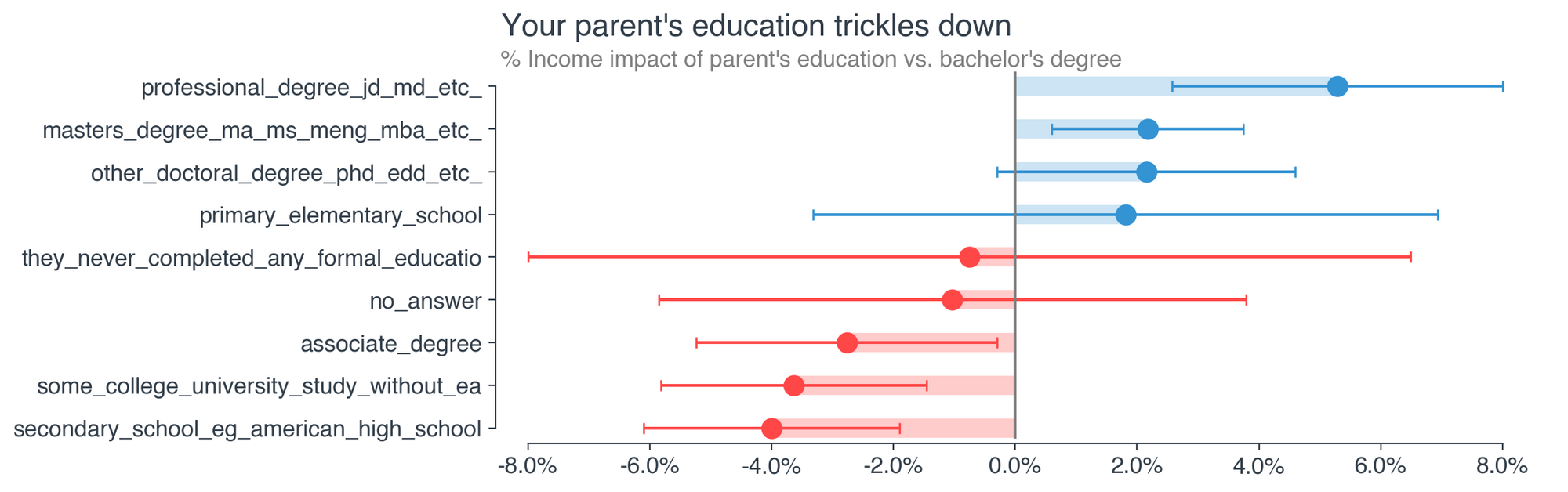

Your parents’ level of education matters as a software developer.

Developers with at least one parent holding a professional degree like a JD or MD earn 5.3% more than a developer whose parents never earned more than a bachelor’s degree. Master’s and doctoral degrees among parents are associated with a 2.2% increase in pay for the children, though this relationship narrowly misses statistical significance for doctoral degrees.

Associate degrees, some college, and high school all have significantly negative impacts of 2.8-4%.

Primary school and no formal education were too rare in the dataset to generate precise effect estimates.

It’s interesting to consider how these effects manifest themselves throughout the professional life of a developer

- We can all imagine ways in which parental education could have meaningful follow-on effects in the lives of children

- It is also possible that, with more controls, these effects would dissipate or lose significance

- For example, the effect may channel itself through income or other variables that correlate with (or are influenced by) education, rather than schooling itself

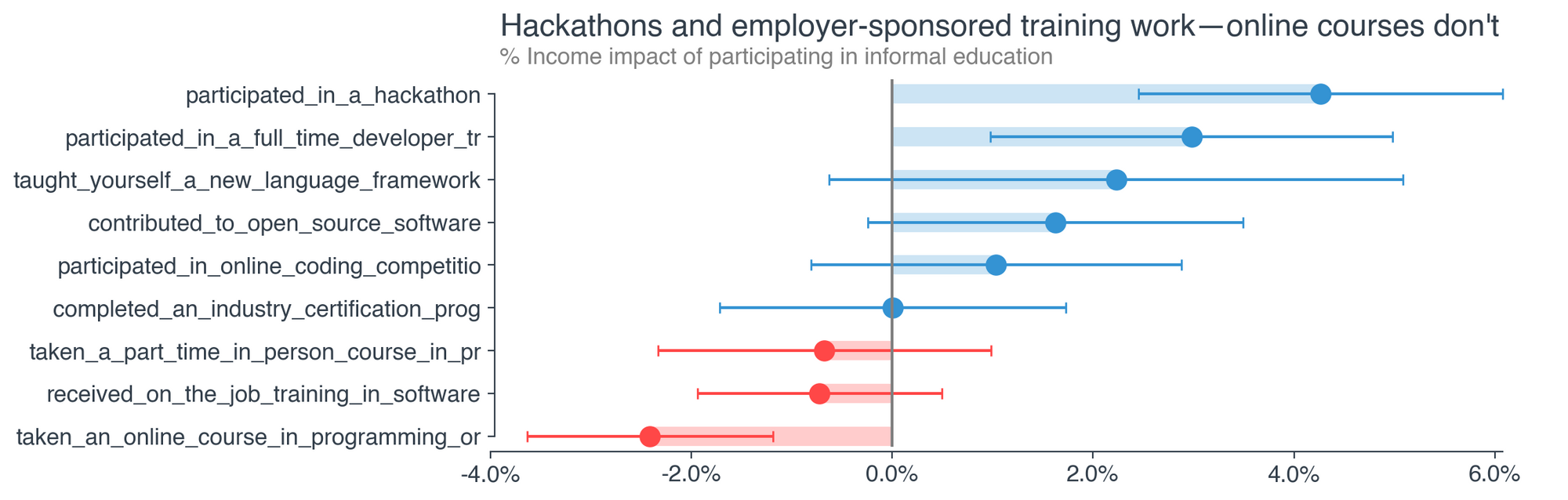

Participation in hackathons is clearly associated with higher pay, more than 4.3% higher. So are full-time developer training programs (bootcamps), which provide a 3% bump.

We’ve witnessed an explosion in the number of coding bootcamps over the past decade. The pay bump is equivalent in magnitude to a master’s degree, which is incredible given bootcamps take months to complete, not years, and are generally cheaper in tuition than an advanced degree.

Contributing to open source software, or OSS (certainly an educational experience, in a sense), is associated with a positive but not statistically significant bump of 1.6%.

- Open-source penetration of the typical software stack has only increased over the years—it would make sense if active contribution to OSS is rewarded with higher pay.

Industry certifications get a big fat zero. No effect.

On the other hand, online programming courses, MOOCs, etc. are associated with a significant drop of slightly more than 2.4%. Given the proliferation of online software development courses over the years, this is an inconvenient finding.

- That all said, I do worry about potentially confounding or omitted variables that have not been controlled for

- I can’t imagine a strong causal link between taking an online course and lower pay, but I can certainly imagine that the “type of person” who takes an online course might also be the type of person that generally earns less

Likewise, the type of person to participate in a hackathon likely has other, unobserved traits that drive higher income

- Certain companies, which may be higher paying on average, also host hackathons in their offices

In other words, correlation may not be causation here, even after controlling for many variables.

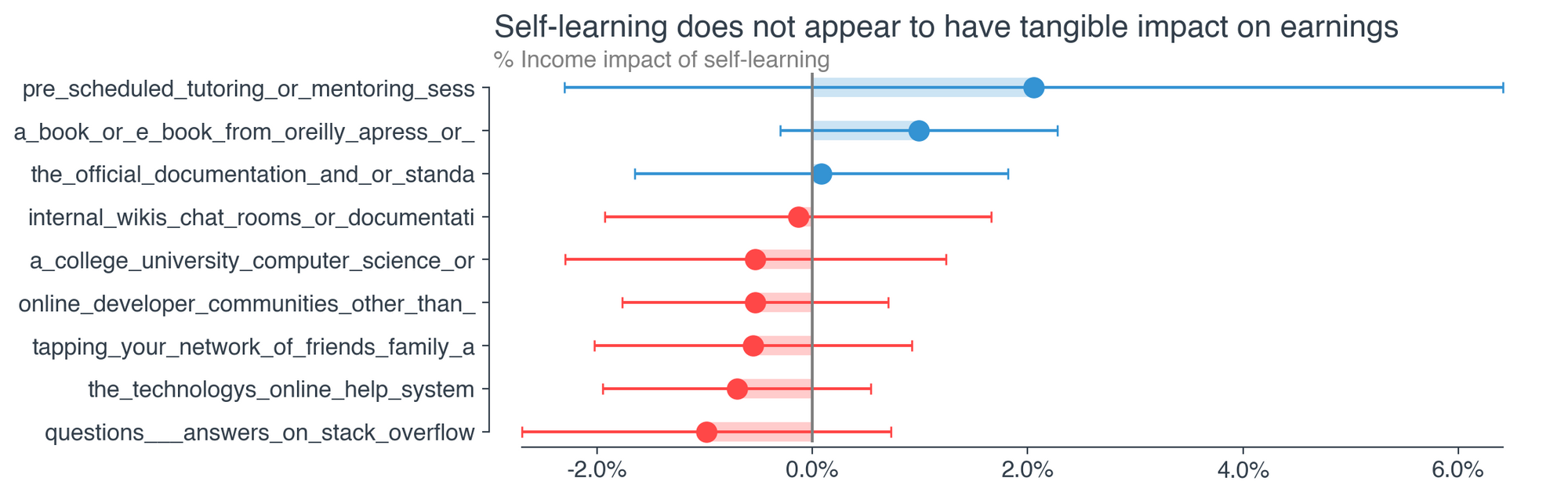

As an avid self-learner myself, I am disappointed to see that no form of self-learning seems to have a statistically discernible impact on earnings.

- Books and e-books were close to achieving statistical significance, but even so, the point estimate is only 1%.

Self-learning is a great way to, in targeted fashion, learn exactly what you need to know about a certain topic or area of knowledge. For many, it is more effective than traditional teaching methods.

However, it doesn’t seem to impact pay in and of itself.

Takeaways

- Education matters, largely in the ways one would guess

- If you’ve already graduated from college, advanced degrees, hackathons, bootcamps, and open source projects can be smart ways to increase your value as a developer in ways that show up in your paycheck

- If you haven’t graduated yet, know that the exact major you pick matters less than showing interest or commitment to the craft, at least when it comes to pay

Professional Characteristics

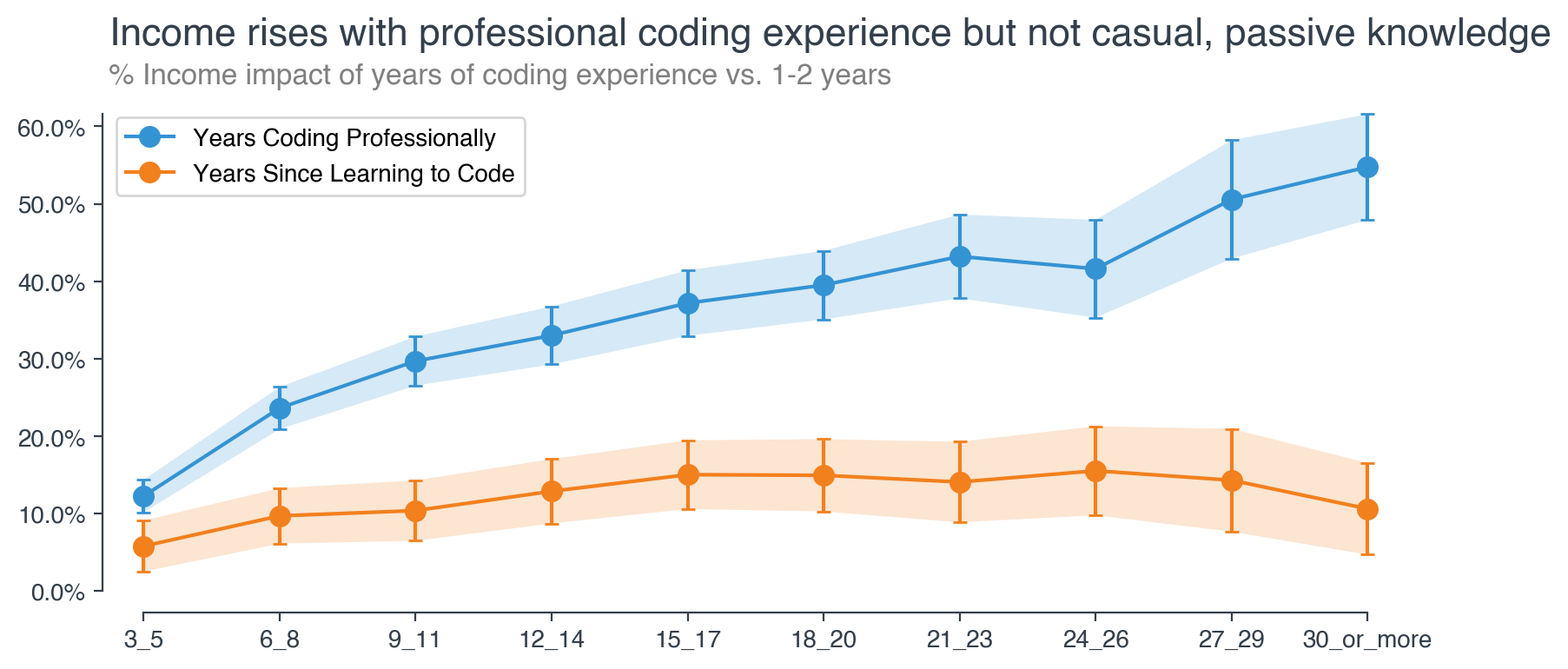

Professional development experience matters much more than casual coding. The gains from more years of general coding experience tend to plateau after 15 years, while professional coding experience continues to pay dividends well into the 30 year range.

The benefit from experience with casual coding (relative to 0-2 years) maxes out at 10-15% and actually begins to decline after 26 years.

- Again, this is holding other factors equal, including professional experience, so this may make sense

- Someone who learned to code 30 years ago and has little more to show for it than someone with only 10 years of coding experience might raise flags.

- We must be careful, however: taking the age at which one learned to code as given, years since learning to code correlates perfectly with age, suggesting that ageism may be leaking in here. I explore age specifically elsewhere in this post.

The fastest gains from professional experience come in the first ten years.

- A developer with 9-11 years of professional experience can expect to earn 30% more than a newbie, all else equal, which translates to about 2.5 percent year-over-year gains.

- Of course, “all else” is likely not equal for any given developer across a ten-year span, suggesting even greater potential annual raises for a given developer in the real world.
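The year-over-year figure follows from the standard compound-growth formula: a total gain g spread over n years corresponds to a constant yearly rate of (1 + g)^(1/n) - 1. A minimal sketch, with the function name chosen for illustration:

```python
def annualized_gain(total_gain, years):
    """Constant yearly growth rate that compounds to `total_gain` over `years`."""
    return (1.0 + total_gain) ** (1.0 / years) - 1.0

# A 30% total gain spread over the 9-11 year experience bucket.
for years in (9, 10, 11):
    print(f"{years} years: {annualized_gain(0.30, years):.2%} per year")
```

Depending on where in the 9-11 year bucket a developer falls, the 30% premium works out to roughly 2.4% to 3.0% per year, consistent with the "about 2.5 percent" quoted above.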

After the first decade, the line is nearly straight, aside from a mid-career slump at the 25-year mark.

- Do not be fooled, however—constant absolute gains in fact represent declining growth

- In other words, each year of professional development experience provides a nearly-constant dollar raise but a declining percentage raise. I explore this phenomenon further in an upcoming post
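A short sketch shows why a straight line in dollars means slowing growth in percentage terms. The starting pay and yearly raise below are hypothetical round numbers, not estimates from the survey.

```python
def percent_raises(starting_pay, yearly_raise, years):
    """Year-over-year percentage raises when pay grows by a fixed dollar amount."""
    raises = []
    pay = starting_pay
    for _ in range(years):
        raises.append(yearly_raise / pay)  # same dollars over a growing base
        pay += yearly_raise
    return raises

# Hypothetical: $100,000 starting pay and a flat $3,000 raise every year.
raises = percent_raises(starting_pay=100_000, yearly_raise=3_000, years=5)
for year, rate in enumerate(raises, start=1):
    print(f"year {year}: {rate:.2%}")
```

The dollar amount never changes, but because it is divided by an ever larger salary, the percentage raise shrinks every year.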

With that caveat, it is still encouraging to see that developer pay does not fully plateau or reverse course with additional professional experience over time.

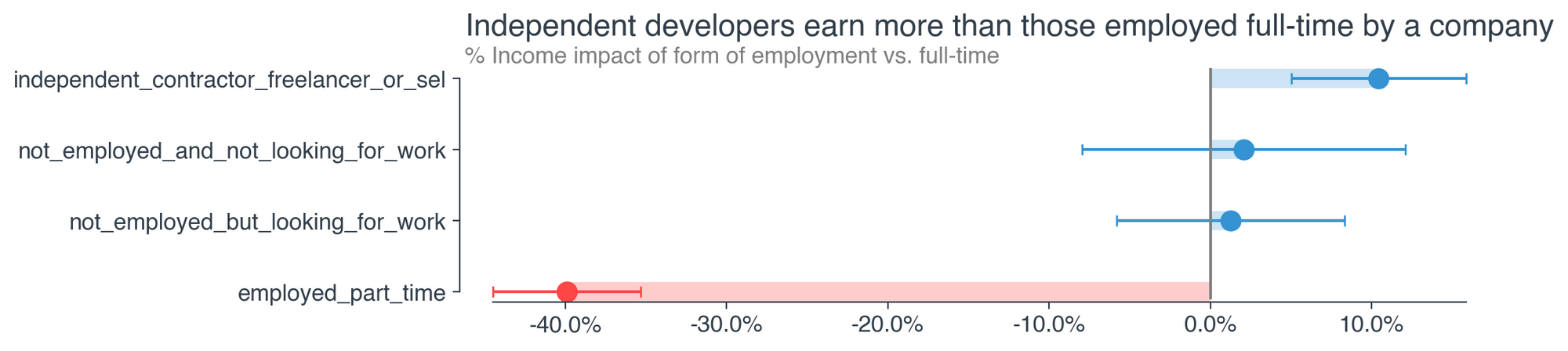

No surprise here—working part-time does not help your earnings.

More interestingly, developers working as freelancers or independent contractors make more than those employed full-time

- This may be compensated for by what is almost surely more variable income

- In general, stable, full-time employment does come at a cost, not only in software development but in many other areas of the economy

Reverse causation could also be at play here—someone who knows they could make more as a freelancer is exactly the kind of person who would become one.

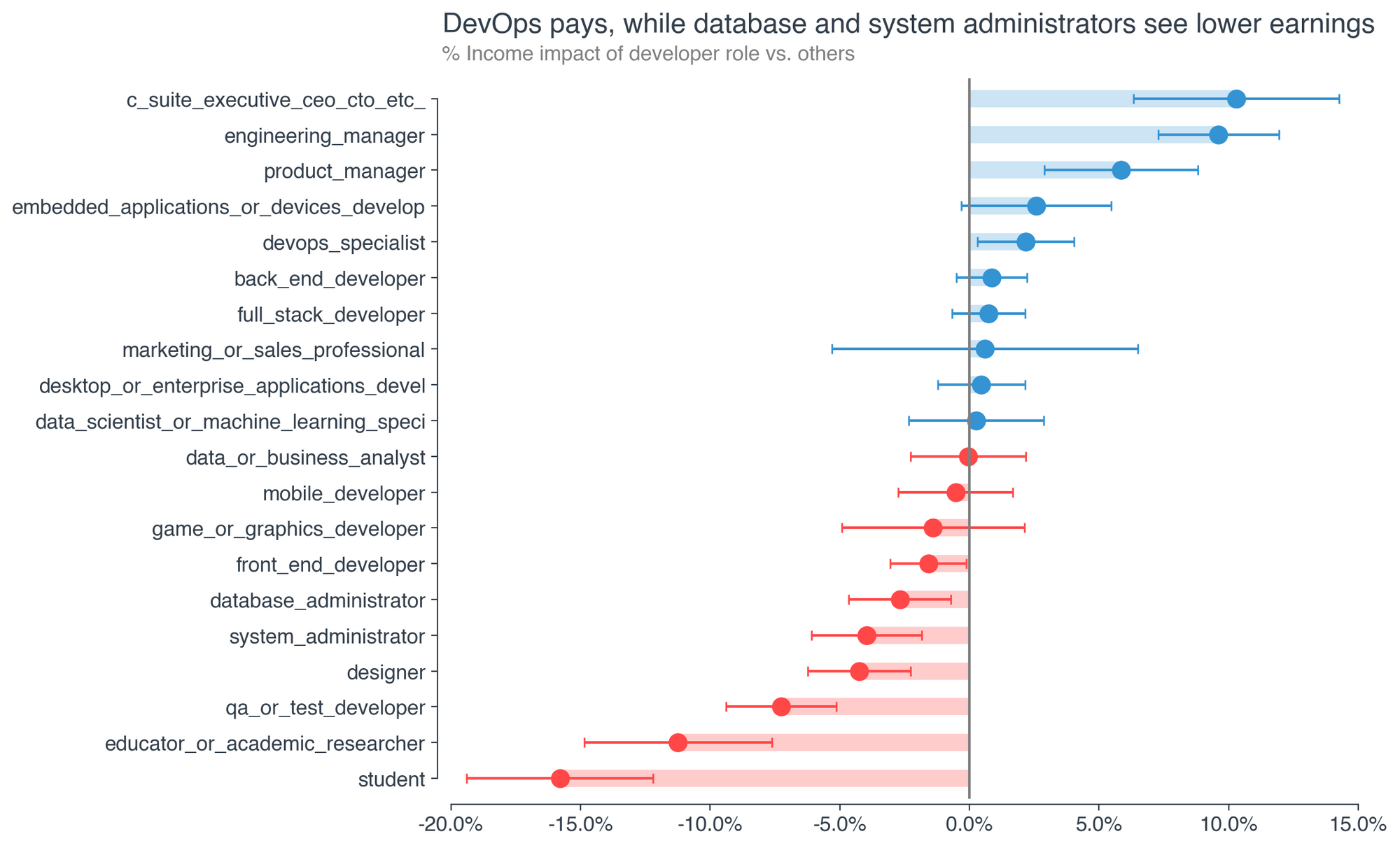

It’s no surprise that managers and executives earn more. This includes product managers, who might not write significant amounts of code themselves.

- Engineering managers and C-suite execs make 10% more, while product managers make 5.8% more, all else equal.

The only non-manager role that makes meaningfully more than its counterparts is DevOps:

- DevOps specialists are about 2.2 percentage points higher on the pay scale than the non-DevOps average for similar developers.

Conversely, there are a number of roles that make noticeably less despite similar levels of experience, education etc.

- Database admins (DBAs), sysadmins, designers, and QA / testing developers make 2.5-7.5% less than developers who don’t fall under these categories

- With the exception of designers, these roles are commonly seen as the “back office” of IT, though one could easily find counter examples depending on the specific team or organization

- Perhaps needless to say—academics and students earn meaningfully less than those outside of academia

Takeaways

- Professional experience matters and never stops mattering

- Casual development experience helps too—but only so much, measured both by the amount of experience and the earnings impact

- Among those working in industry, role matters positively for managers and DevOps professionals and negatively for system and database administrators, as well as designers

Languages, Frameworks, Databases, Platforms, and Tools

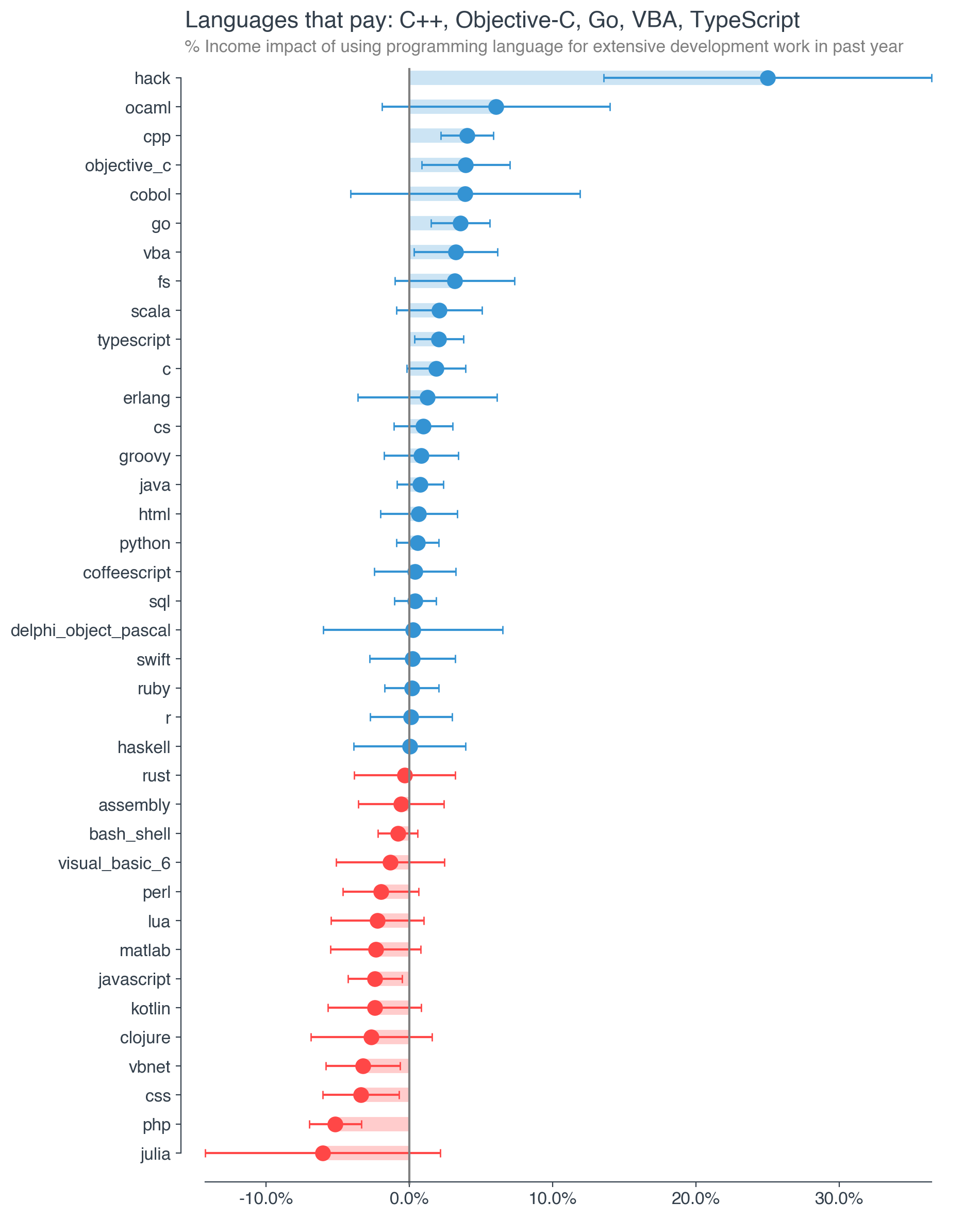

The main implication of these results—languages largely don’t matter to pay. The vast majority of languages do not provide a statistically significant pay bump or discount relative to jobs that don’t require that language. The pay scale for programming languages is quite flat across the universe of languages.

Hack is a clear outlier—working with Hack yields a 25% bump. However, don’t drop everything to go learn Hack right now

- Hack is not a widely used language

- It was developed by Facebook, and unlike other languages and frameworks that come out of the big tech giants, Hack has not had the same marketing push placed behind it.

- The effect we see here likely reflects developers who work at Facebook itself—there’s no sign of a robust hiring market for Hack talent.

Outside of Hack, on the positive side we find Objective-C, C++, Go, VBA, and TypeScript with statistically significant income effects, ranging from 2.1 to 3.9 percent.

On the negative side we have JavaScript, VB.NET, CSS, and PHP

- JS, CSS, and PHP likely reflect the lower pay of certain web development jobs

- These languages represent fundamental technologies of web development

- For that same reason however, they are considered table stakes

- Hence, using these technologies on the job doesn’t provide much of a pay bump, though you may be quite employable

Please note that the results above are not saying “knowing language X increases / decreases pay.” Knowing a language is not likely to ever harm one’s pay, but working a dev job that uses it might (relative to other development work).

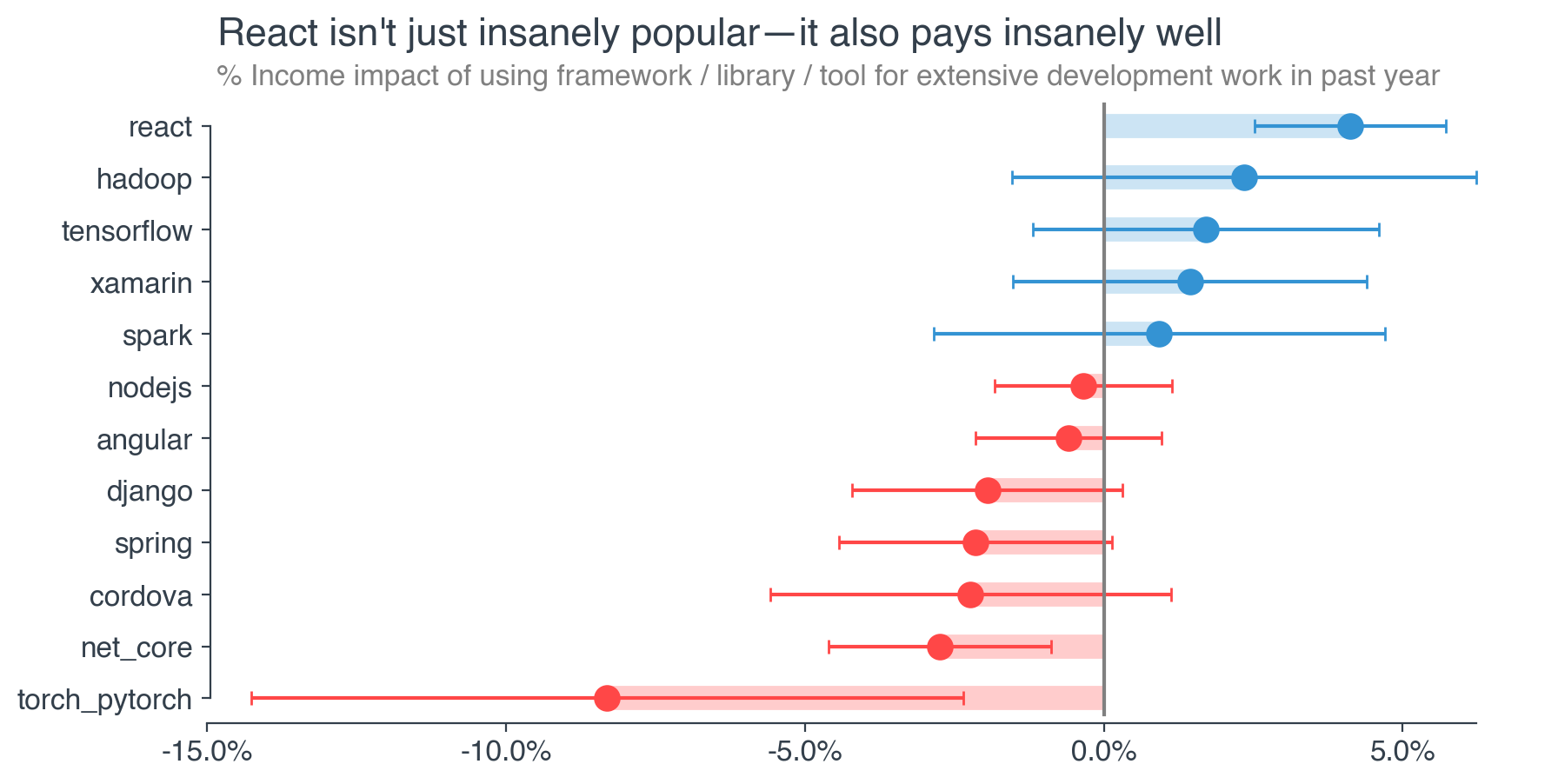

React—what needs to be said?

- The cutting-edge JavaScript framework originated and maintained by Facebook is widely and wildly popular, especially among front-end developers looking for an easy-to-use but powerful way to craft compelling user interfaces.

- React is associated with a 4.1% pay increase and is the only framework with a statistically significant positive impact.

Working with .NET Core and PyTorch negatively impacts income

- I do not have a hypothesis about .NET Core, but I do know that PyTorch was historically used much more among researchers than industry practitioners, likely driving lower pay

- Usage has since branched out as the framework has added production-grade capabilities and tooling.

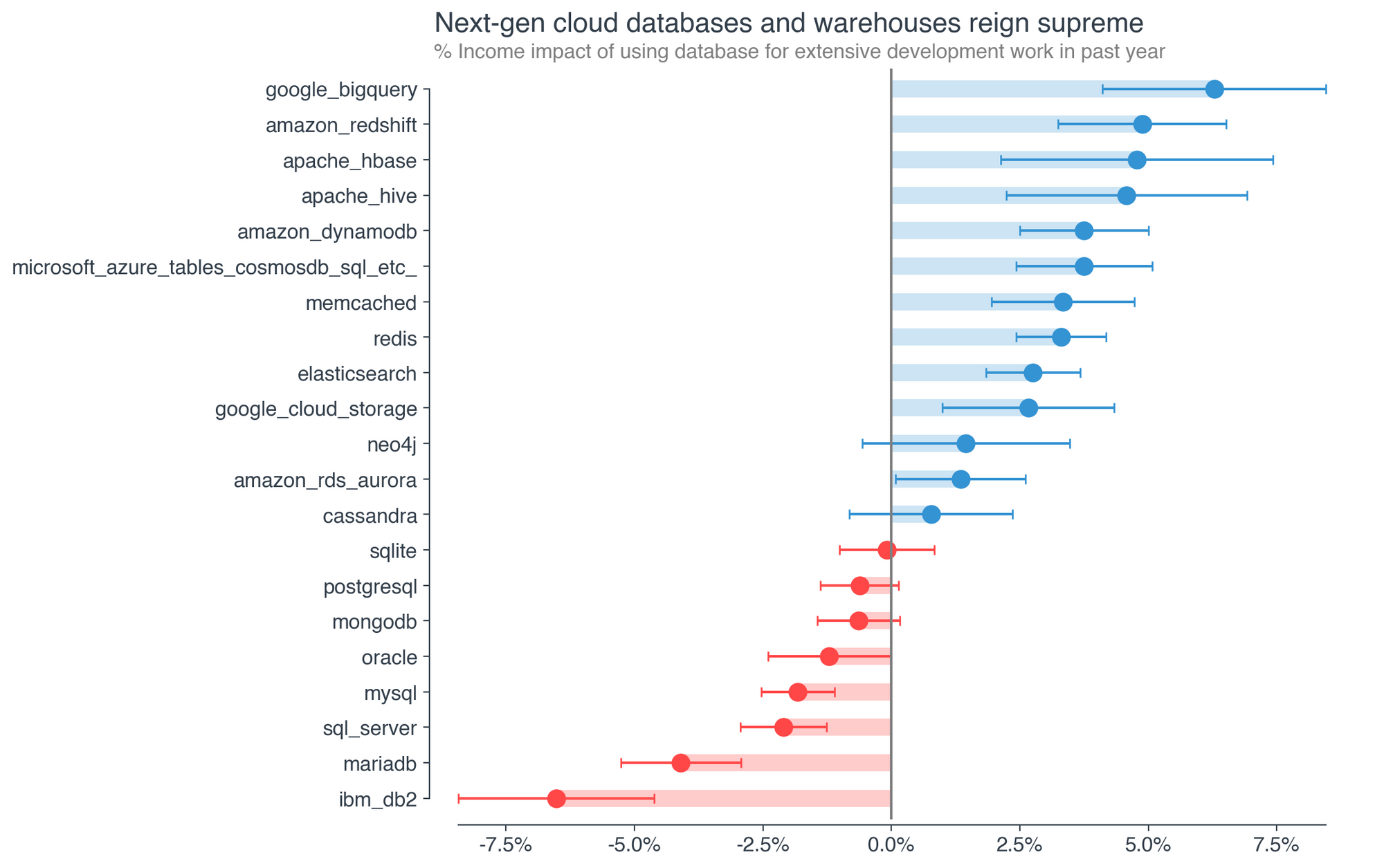

One word: cloud. Almost all of the databases with positive income effects are hosted in the cloud, often exclusively as a managed service by one of the major public cloud service providers (Google, Amazon, Microsoft).

Google’s BigQuery data warehouse tops the list, followed closely by Amazon’s competing Redshift. Apache HBase and Hive come next. Afterwards it’s Amazon’s DynamoDB and Microsoft’s Azure Tables, Cosmos DB, and SQL. The list continues with Memcached, Redis, Elasticsearch, Google Cloud Storage, and Amazon RDS and Aurora, all positive and statistically significant.

Older database technologies from legacy vendors dominate the negative end. This includes Oracle’s once ubiquitous databases, MySQL, SQL Server, MariaDB, and IBM’s Db2.

It’s fascinating to see the impact of the cloud revolution laid out in stark relief. There are meaningful income gains associated with working with next-gen cloud-enabled databases. This fact should be top of mind for developers looking to upgrade their skills and pay.

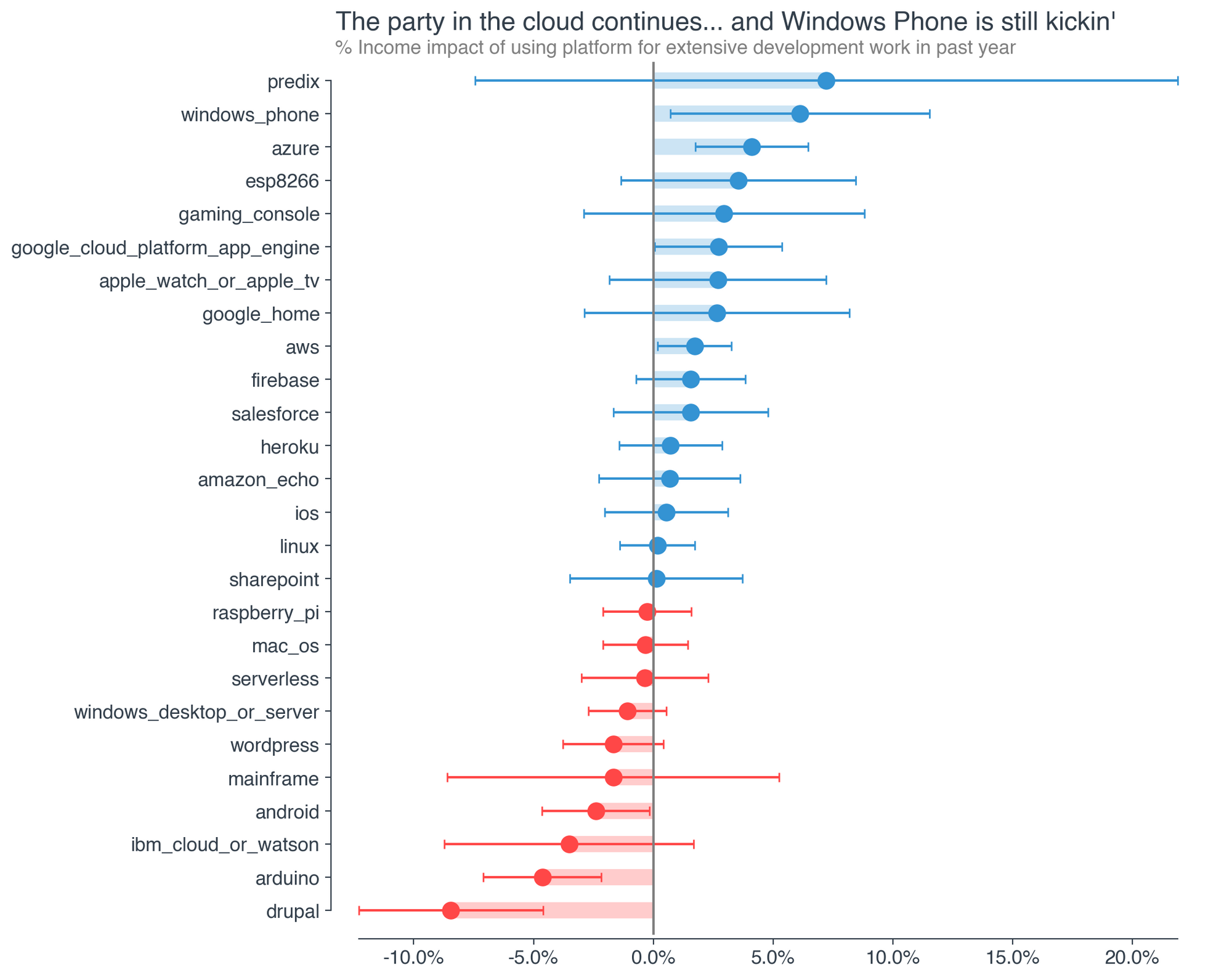

I’ll say it again—cloud. The various public clouds are the only platforms aside from Windows Phone with positive, statistically significant effects on developer earnings. Azure is associated with 4.1% higher pay, GCP 2.7%, AWS 1.7%.

And Windows Phone? Not going to try to explain that one.

Android, Arduino and Drupal had meaningful negative association with income—2.4%, 4.6%, and 8.5% respectively.

Android’s ubiquitous operating system needs no introduction, though its negative pay relationship might need an explanation. Unfortunately, I am stumped here.

Arduino is an open-source prototyping platform with associated hardware microcontrollers that eases development of electronic devices. Though used for serious development work, Arduino is also heavily used by engineering students, which likely drives the negative value.

Drupal is a PHP-based content management system whose popularity peaked in 2011 but has since been on a slow decline.

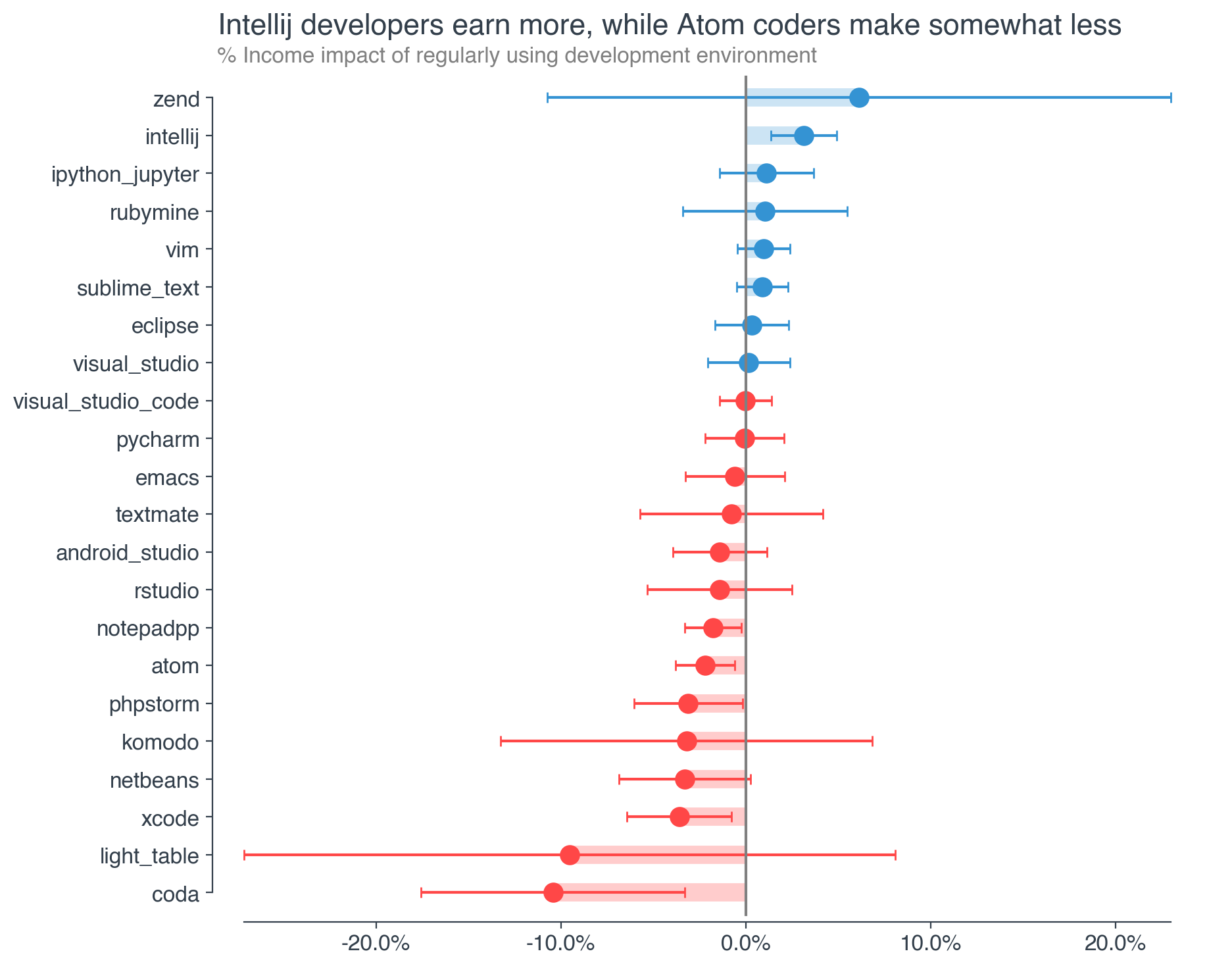

You might think that a developer’s choice of IDE or text editor wouldn’t matter, and you’d be (largely) correct! The associated effects here are generally quite small.

However, IntelliJ stands out from the pack

- Billed by JetBrains, its creator, as the “Java IDE for professional developers”, IntelliJ users make 3.1% more than non-users

- Of course, this is not an entirely useful comparison for someone who doesn’t use Java in their development work

Notepad++ and Atom are associated with slightly lower earnings, on the order of 2%, which is statistically significant. Notably, both are popular but are generally viewed more as text editors than fully-fledged IDEs.

PhpStorm, Xcode, and Coda also had negative effects that were statistically significant.

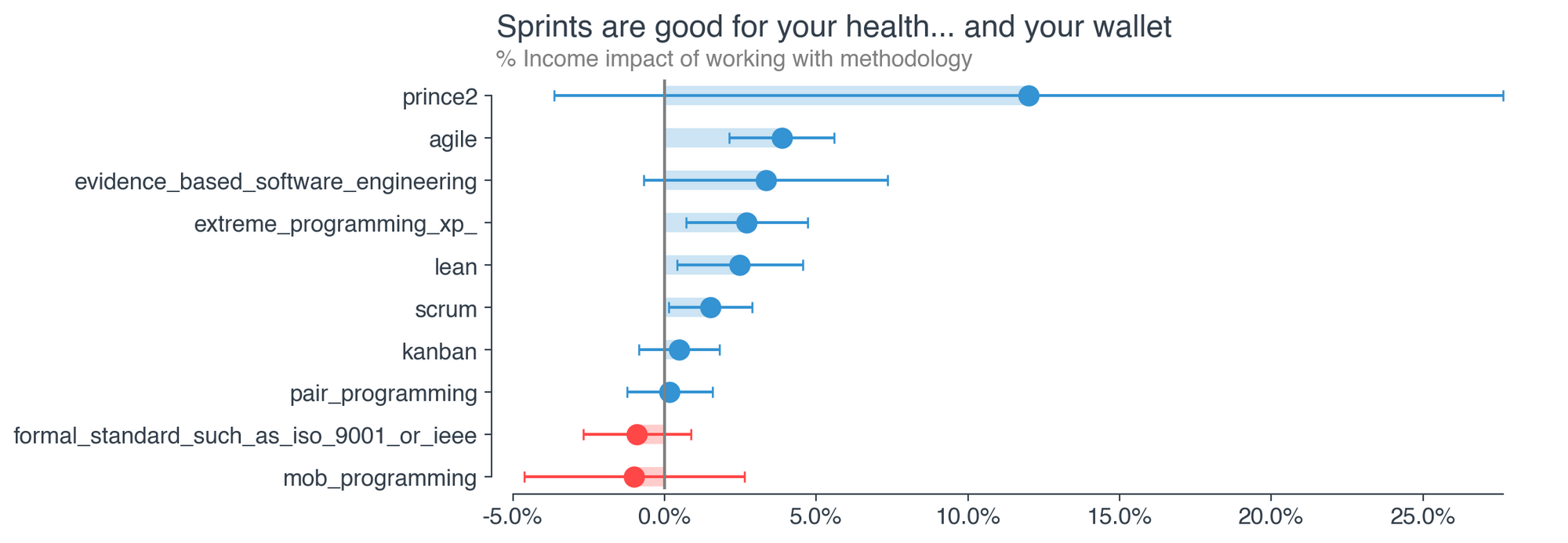

The incredibly popular Agile leads the pack among project management methodologies. Devs who use Agile in their development work earn 3.9% more than those who don’t.

The less common extreme programming methodology is associated with 2.7% higher pay.

Lean comes in at 2.5%, while Scrum developers earn 1.5% more, all else equal.

Prince2 appears to be an outlier, but this is mostly due to limited data: only 0.1% of developers in the sample use the methodology.

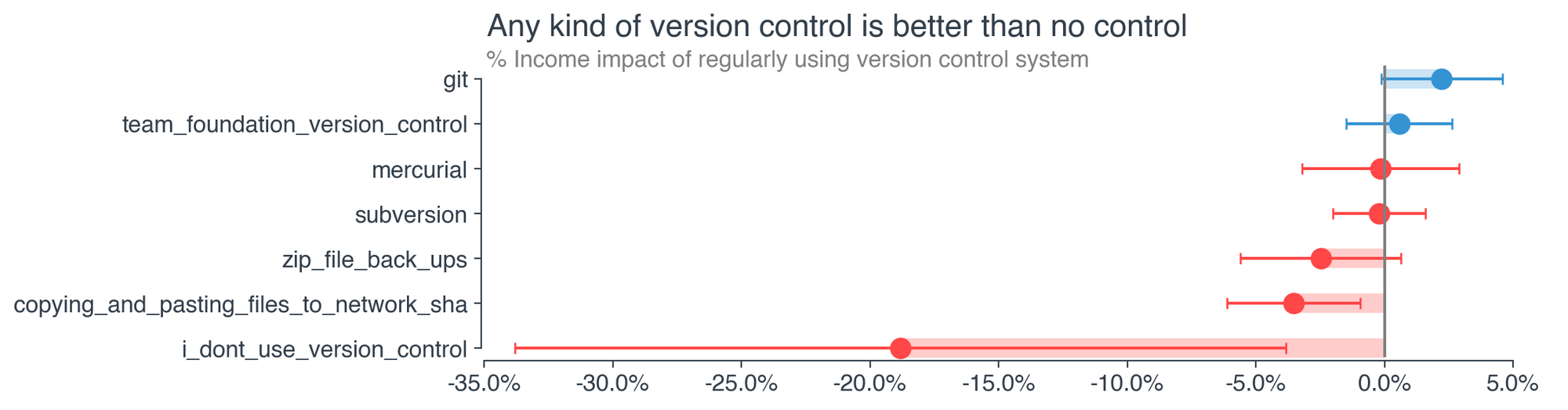

For the most part, the exact form of version control used does not matter.

The key takeaway here—any kind of version control is better than no version control, but please, please avoid copying and pasting files to a network share.

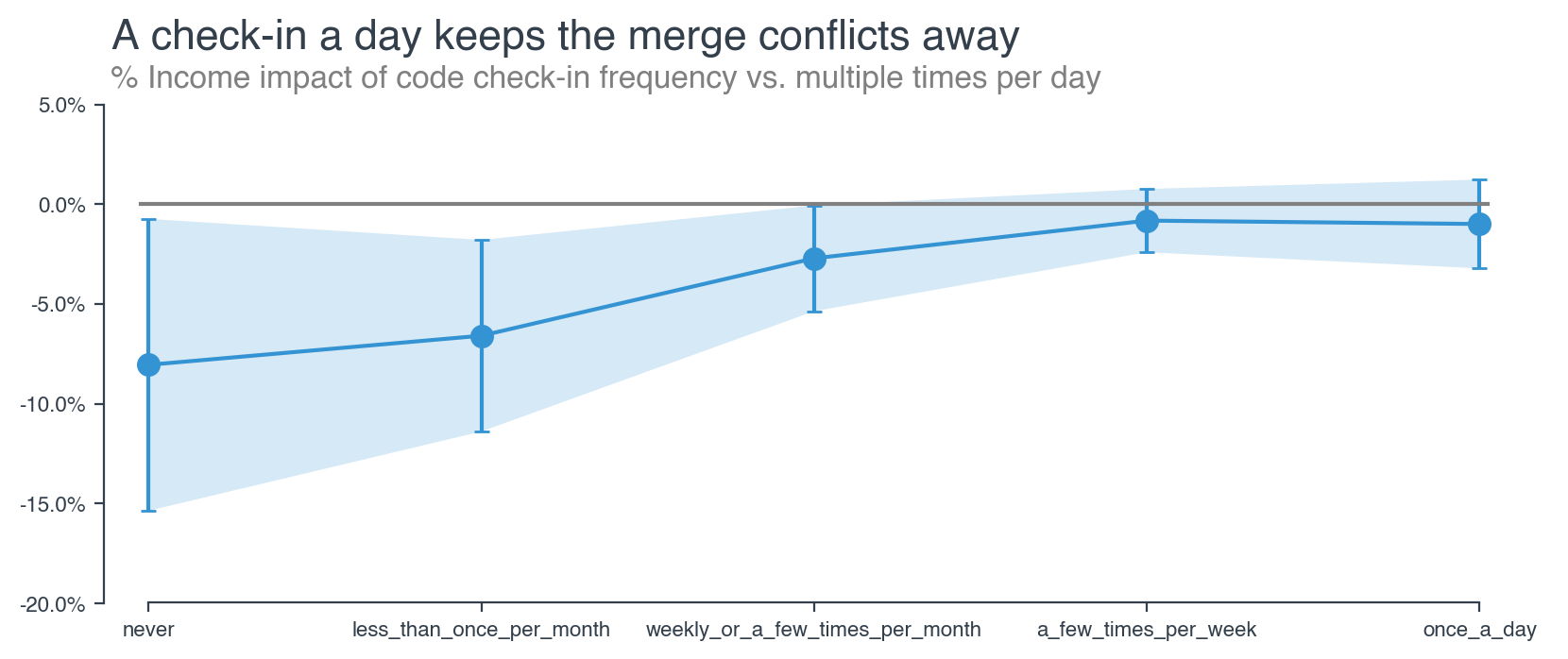

The best practice appears to be the best paid

- Checking in code multiple times per day

- It’s clearly important to check in code on some kind of cadence—at least monthly, ideally

- If you are checking in code less than once per month or not at all, your pay is likely being impacted as a result.

Not to sound like a broken record, but the reverse causation caveat applies here as well. It is completely plausible that checking in code more often increases a developer’s pay. It’s also possible that companies that pay more are more likely to follow and mandate development best practices, such as frequent code check-ins.

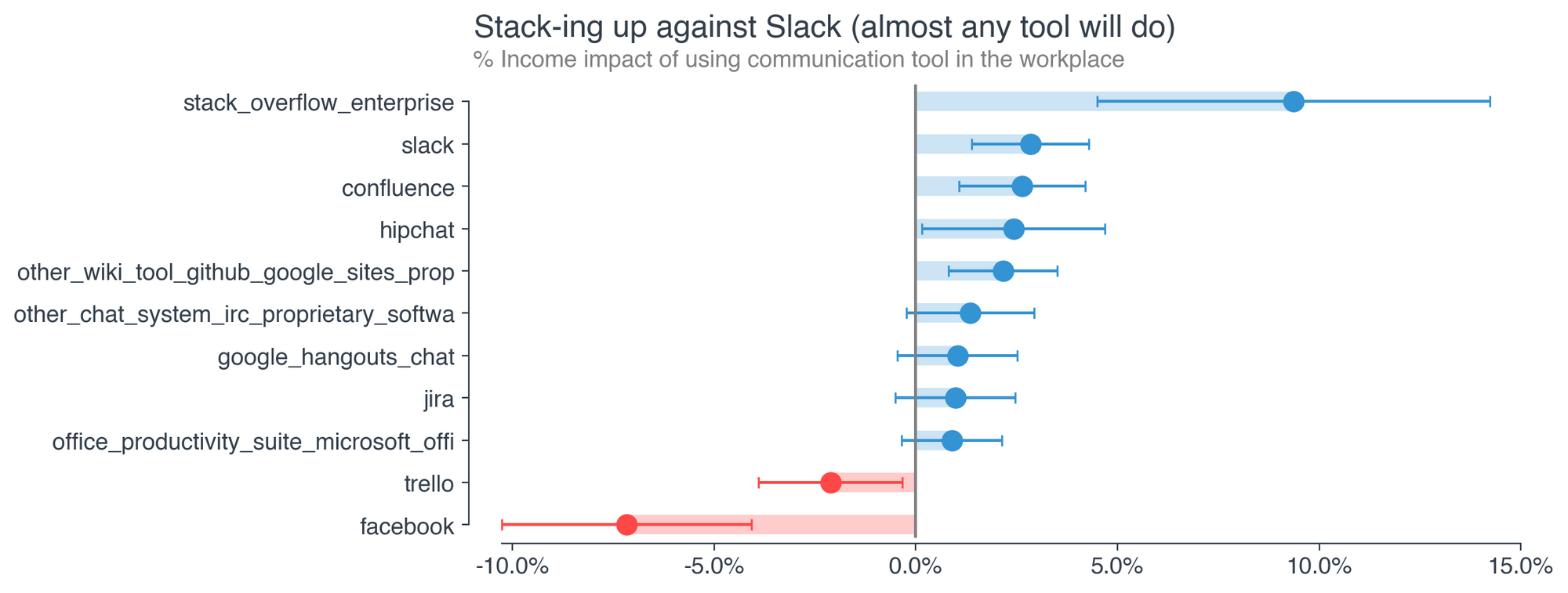

It seems too convenient that, in a survey run by Stack Overflow, its own enterprise product would be associated with significantly higher developer pay, but I can only go where the data takes me. Developers using Stack Overflow Enterprise earn 9.4% more than those who don’t.

This likely picks up some effect of simply working at a company that uses Stack Overflow Enterprise, which may be higher paying on average.

Other communication tools had smaller positive impacts: the incredibly popular Slack, Atlassian’s Confluence, HipChat, and internal intranet sites (wikis, Google Sites, etc.) all have earnings effects in the 2-3% range.

Trello and Facebook are negative enough to be statistically significant.

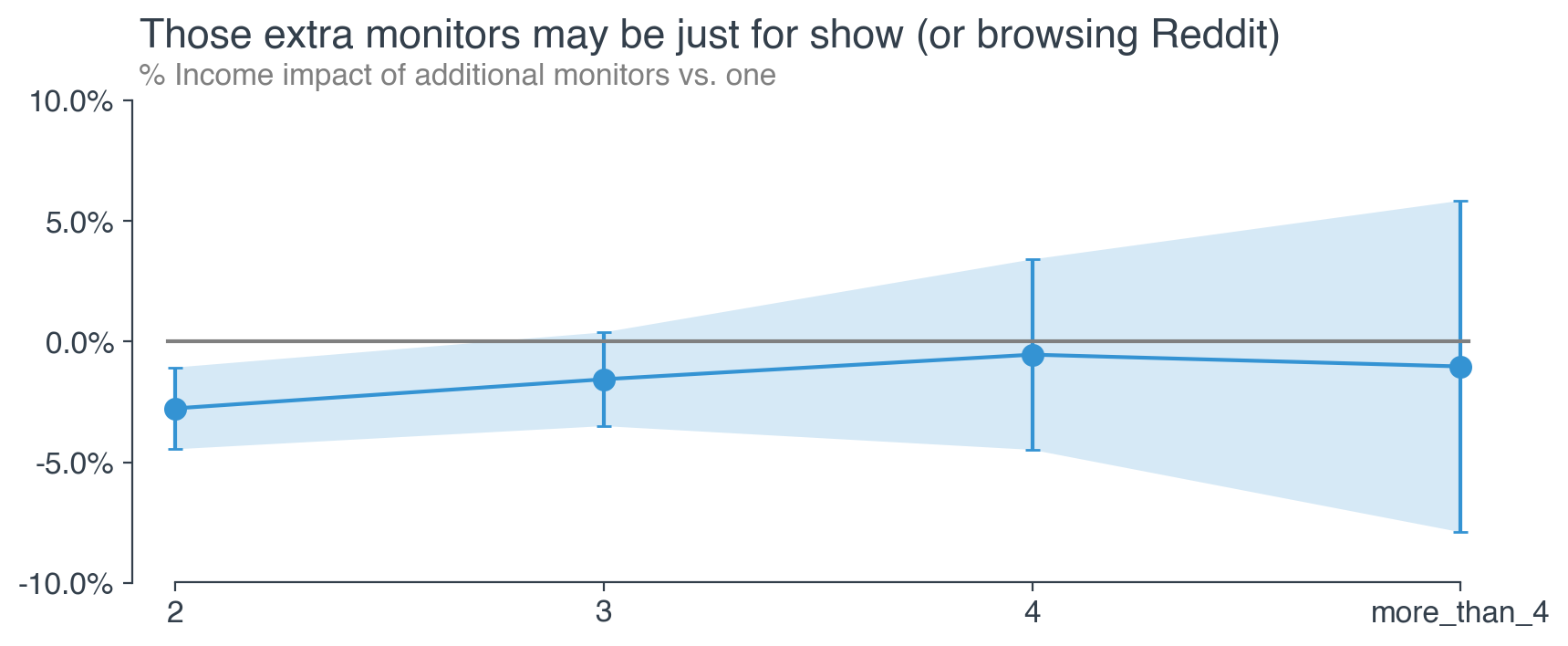

Surprisingly, developers who use two monitors earn 2.8% less than those who use only a single monitor, all else equal.

At this time, it’s not clear why more monitors would negatively impact earnings. The potential for enhanced productivity may be swamped by the temptation to use the additional screen real estate for non-work activities.

No other monitor count had a statistically significant effect on income relative to one.

Takeaways

- The tools associated with higher developer pay are quite interesting and not necessarily what one might expect

- In some cases, the most popular tools also pay the most

- In other cases, more obscure tools appear to have an advantage

- Check in your code (at least every once in a while)!

- One clear trend is the impact the move to the cloud is having on developers—the effects of the public cloud on developer pay are large and consistently statistically significant across the big 3 U.S. clouds

- Knowing how to use and leverage these next-generation computing environments and finding a job that employs those skills can drive meaningful pay improvements for the average developer

Work Life, Health, and Wellness

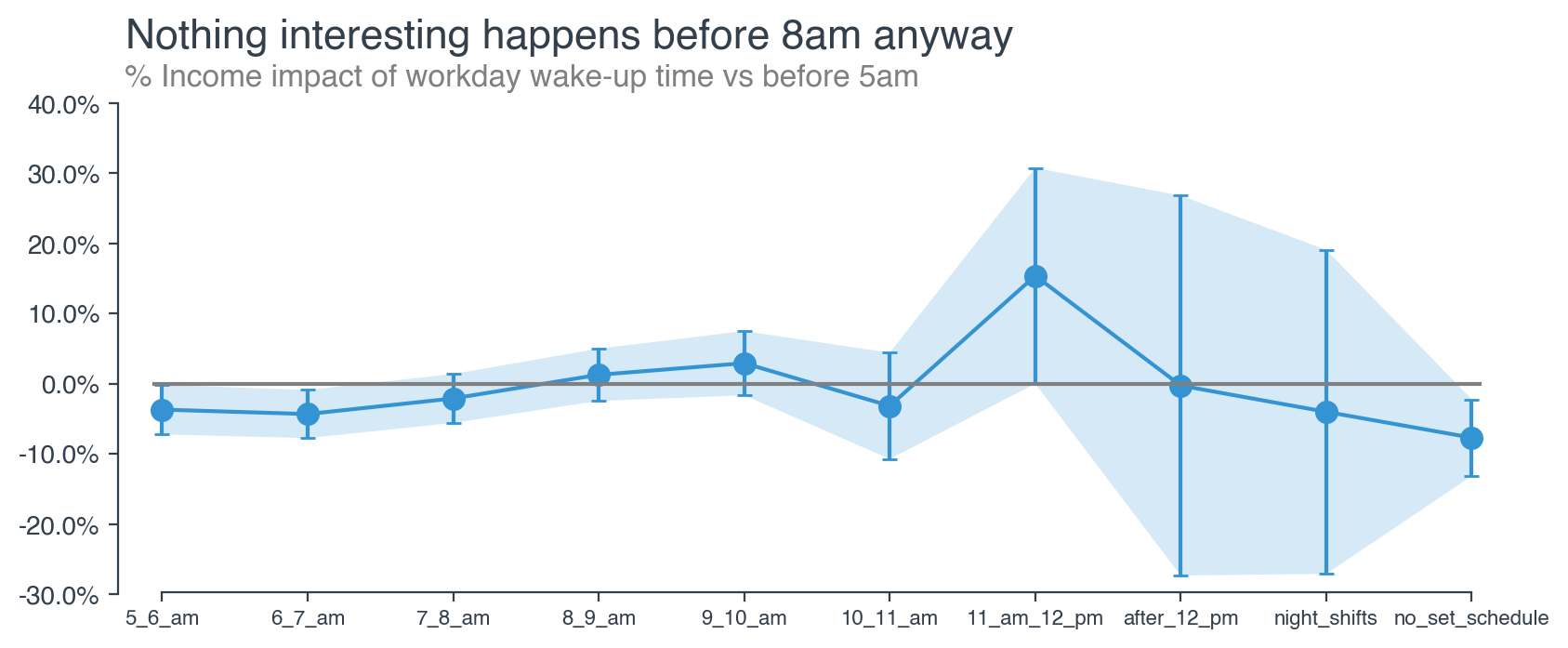

These results are admittedly difficult to interpret. There is no clear linear time trend for the impact of wake up time on earnings.

After 7am, later does appear to be somewhat better, up to a point. Strangely, developers who wake up between 11am and 12pm see 15.4% higher pay than those who are up before 5am.

The most important takeaway is this—have a set schedule. This will do more good than optimizing for a specific wake-up time. Not having a regular wake up time was associated with 7.7% lower pay for software developers.

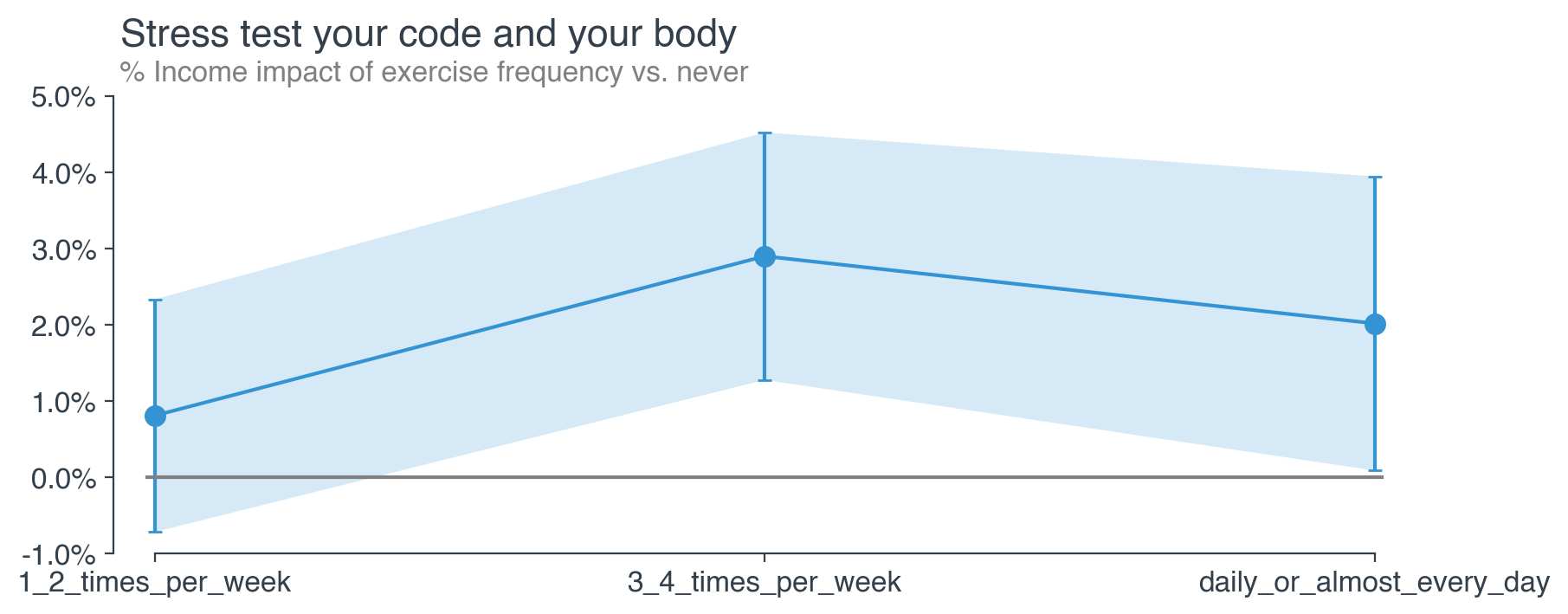

Exercise is strongly associated with earnings. While only exercising once or twice a week does not appear impactful, exercising 3 times or more per week is associated with 2-2.9% higher pay than a similar developer who does not exercise at all.

It is possible however that reverse causation could cause developers who earn more to work out more—perhaps because they have more time or can more easily afford a gym membership. Alternatively, higher paying companies often have gyms on-premises, making it easier to work out more.

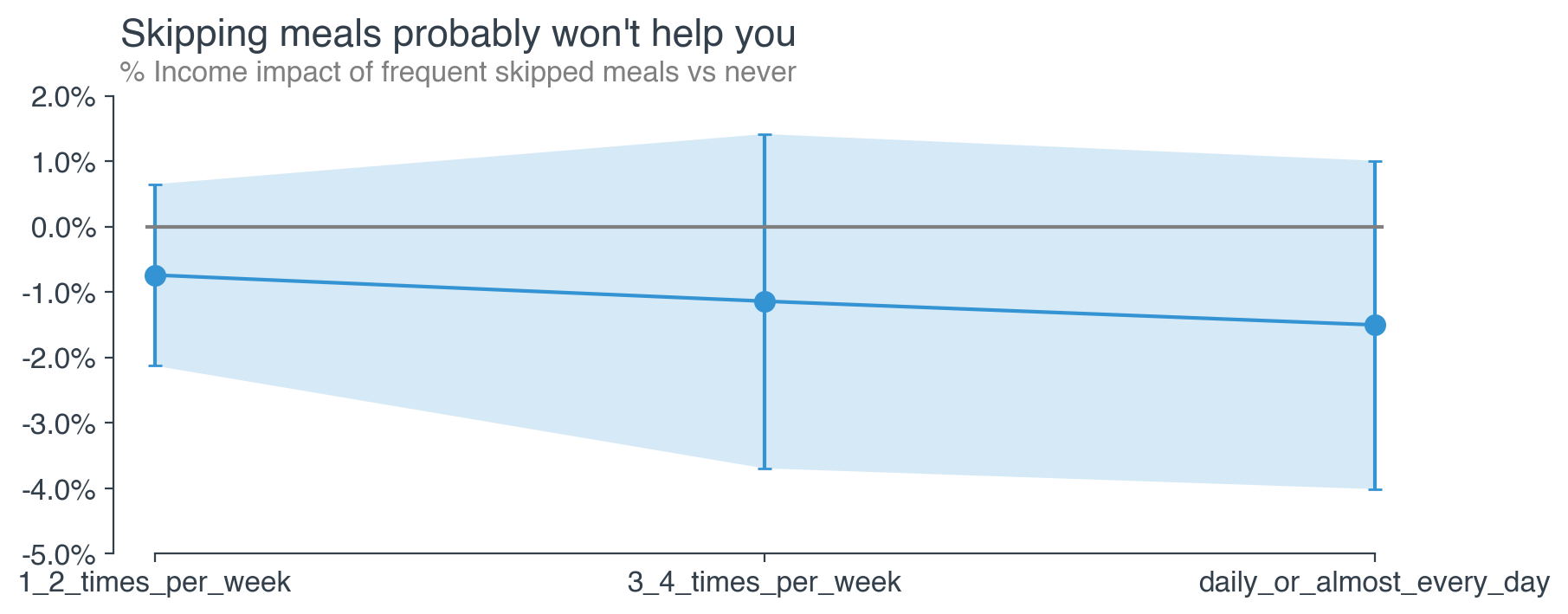

Don’t skip meals. Any amount of meal skipping was associated with lower pay, though never statistically significant. Developers are not rewarded for this unhealthy work habit.

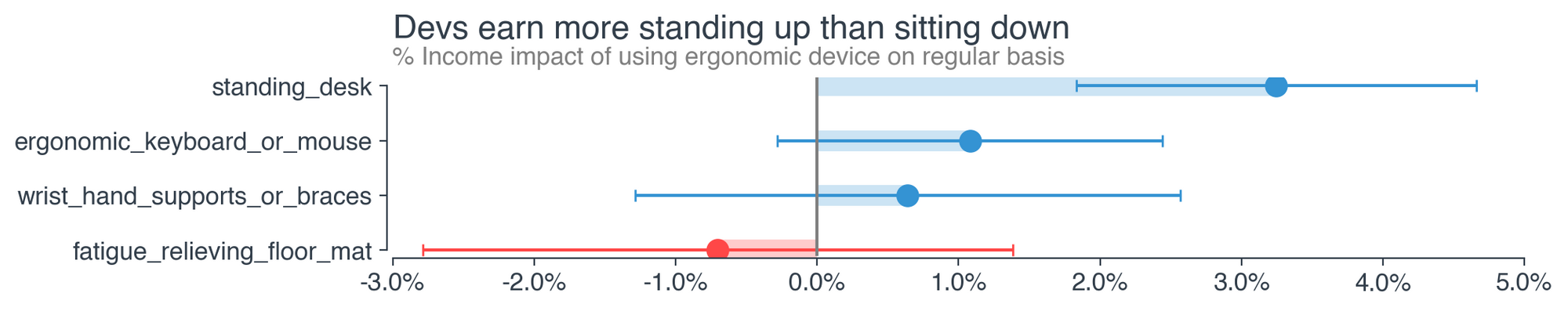

Among ergonomic devices, standing desks were associated with 3.2% higher pay, which is statistically significant.

Again, higher paying companies are potentially more likely to provide employees with standing desks, so the direction of causality here is questionable.

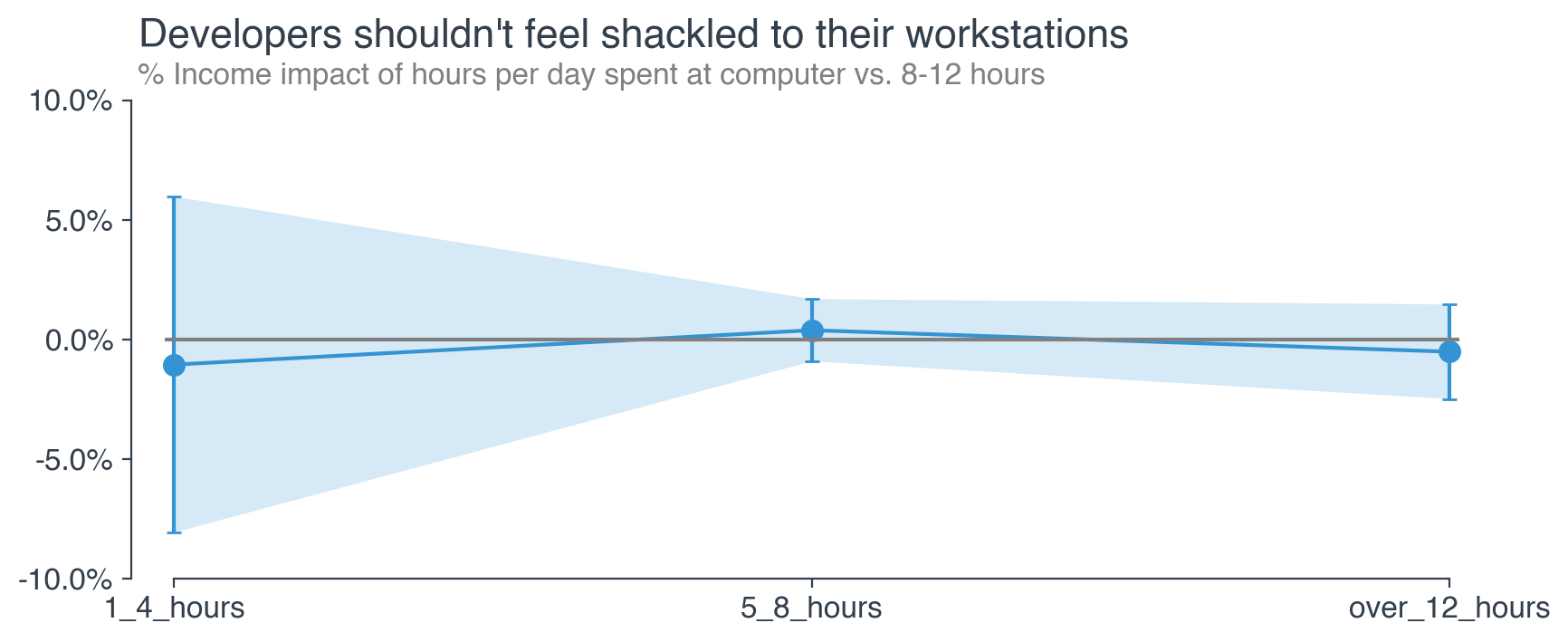

Time spent at the computer each day does not have a meaningful relationship with pay. Handcuffing yourself to your laptop is not going to earn you higher pay as a developer.

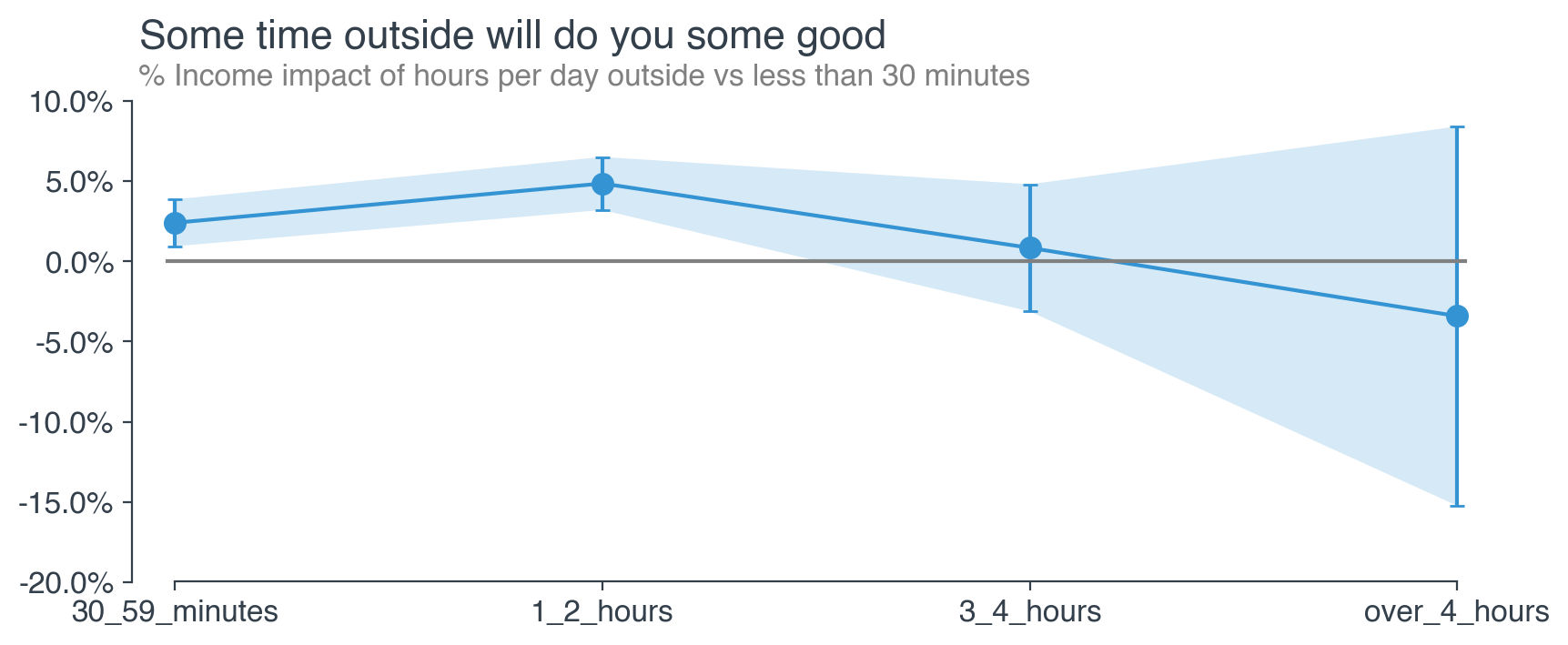

Unlike time spent at the computer, time spent outside does have an impact on pay, with the ideal amount being 1-2 hours. Spending fewer than 30 minutes outside is associated with 2.4% lower pay than 30 minutes to an hour.

Spending more than 4 hours outside was associated with lower pay, but there were not enough developers who do this regularly to generate a precise estimate.

Takeaways

- The data suggest that common best practices are often the best way to go

- Spend at least a small amount of time outside each day

- Skipping meals will only grow your bank balance to the extent you save money on lunch

- Exercising a few times per week is better than never hitting the gym

Conclusion

There are many important takeaways from the study and the charts above.

This analysis is a first attempt at exploring the various factors that affect developer pay. To that end, I hope that it is illuminating and informative.

However, as with many attempts to answer difficult questions, the analysis raises as many questions as it answers.

I am publishing the full code to reproduce this analysis because I believe open-source, replicable research is the key to the robust advancement of knowledge.

- I would love for this analysis to serve as a starting point for others who wish to elevate the state of knowledge on this important topic

- If you find an error or disagree with some aspect of the analysis, feel free to submit edits (pull requests) to my GitLab or GitHub repositories

I care deeply about the technology industry. But solving its issues and compounding its strengths demands a rigorous understanding of its component elements. Developers are a critical piece of the tech puzzle, and they deserve our attention.

Appendix

Data

As in my last post, I leverage data from Stack Overflow’s annual software developer survey, which asks about income, in addition to many other questions of interest.

Examples include:

- Which of the following best describes the highest level of formal education that you’ve completed?

- Approximately how many people are employed by the company or organization you work for?

- Which of the following programming, scripting, and markup languages have you done extensive development work in over the past year?

I’m interested in how answers to these questions affect income. While it’s impossible to completely avoid issues of reverse causality or correlation rather than causation, by using regression augmented with machine learning techniques, described here, we can have greater confidence that our results accurately represent the true relationship with income.

Essentially, we’ll analyze how each possible answer affects income, holding all other answers constant.

I limit the dataset to non-retired US respondents above the age of 18 with income between $10,000 and $250,000. Responses above $250K are more likely to be troll (i.e. made-up) responses, which I’d like to exclude, and few answers come in above this threshold regardless.

This leaves us with a dataset of approximately 11,000 developers.
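As a rough illustration of the filters just described, here is a minimal sketch in plain Python. The field names (`country`, `retired`, `age`, `income`) and the sample rows are assumptions made up for this example. They are not the survey's actual column names, and this is not necessarily how the published code applies the filters.

```python
# Hypothetical field names; the real survey export uses its own columns.
def keep_respondent(row):
    """Apply the sample filters described above to one response (a dict)."""
    return (
        row["country"] == "United States"
        and not row["retired"]
        and row["age"] > 18
        and 10_000 <= row["income"] <= 250_000
    )

# Made-up rows, purely to exercise the filter.
responses = [
    {"country": "United States", "retired": False, "age": 34, "income": 120_000},
    {"country": "United States", "retired": False, "age": 29, "income": 900_000},  # above the cap
    {"country": "Canada", "retired": False, "age": 41, "income": 95_000},          # not US
    {"country": "United States", "retired": True, "age": 67, "income": 40_000},    # retired
]

sample = [r for r in responses if keep_respondent(r)]
print(len(sample))  # prints 1
```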

Methodology

I estimate the following equation on the data:

$$\log(income) = \beta_0 + \beta_1T + \beta_2X + \epsilon$$

Where \(T\) is our trait of interest, \(\beta_1\) is the effect of that trait on income relative to the base category, \(X\) is a set of controls (in our case, the respondent's answers to other questions in the survey), \(\beta_2\) is the set of effects for each respective control, and \(\epsilon\) is the irreducible error in our estimate.

Assuming we’ve included a “complete” and “correct” set of controls, this should provide a reasonably accurate estimate of the relationship of the trait of interest, \(T\), with income. The log transformation of income means our results will be in roughly percentage terms, implying that trait \(T\) is associated with an increase in income of \(\beta_1 \times 100\%\) relative to the “base” category, all else equal. The base category will vary by trait.
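To see why the percentage reading is only approximate, note that a coefficient \(\beta_1\) on log income implies an exact change of \(e^{\beta_1} - 1\). The short sketch below compares the two readings. The helper name `pct_effect` and the example coefficients are illustrative only and are not taken from the analysis.

```python
import math

def pct_effect(beta):
    """Exact percentage change in income implied by a log-income coefficient."""
    return (math.exp(beta) - 1.0) * 100.0

# Illustrative coefficients, not estimates from the study.
for beta in (0.03, 0.15, 0.50):
    approx = beta * 100.0
    exact = pct_effect(beta)
    print(f"beta={beta:.2f}  approx={approx:.1f}%  exact={exact:.1f}%")
```

For small coefficients the two readings nearly coincide, which is the range most of the effects reported in this post fall into. The gap widens as the coefficient grows.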

Selecting the right set of controls is non-trivial. One has any number of degrees of freedom to select a subset of controls among all those available, opening the doors to “p-hacking” and other infamous behavior, which can lead to incorrect (biased) estimates of the parameters of interest.

To avoid this, I leverage a powerful machine learning technique, Double Selection (specifically Double Lasso Selection), to do principled covariate selection for each trait \(T\), rerunning the regression for every trait. As described in Belloni, Chernozhukov, and Hansen (2011), this should provide a more accurate estimate of the income effect of each trait than simply using all the covariates or attempting to manually select a subset. I won’t cover the method in detail here; the original paper has more information. This post provides a relatively intuitive explanation, and is also where I originally learned of the technique.

Long story short, Double Selection gives us much more confidence that the estimates reflect the true effects.
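For readers who want a feel for the mechanics, the following is a minimal, dependency-free sketch of the double-selection idea on simulated data. It is not the code behind this analysis. The data are synthetic, the helper names (`lasso_selected`, `ols`) are hypothetical, and the fixed penalty `lam=0.1` is an assumption, whereas the original paper derives a data-driven penalty. The three steps are: (1) lasso of log income on the controls, (2) lasso of the trait on the controls, (3) ordinary least squares of log income on the trait plus the union of the controls selected in either step.

```python
import random

def center(values):
    mean = sum(values) / len(values)
    return [v - mean for v in values]

def soft_threshold(value, lam):
    """Shrink value toward zero by lam (the lasso update step)."""
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0

def lasso_selected(columns, target, lam, sweeps=50):
    """Indices of columns given nonzero lasso coefficients (coordinate descent)."""
    n = len(target)
    cols = [center(c) for c in columns]
    scales = [sum(x * x for x in col) / n for col in cols]
    resid = center(target)
    coefs = [0.0] * len(cols)
    for _ in range(sweeps):
        for j, col in enumerate(cols):
            rho = sum(x * r for x, r in zip(col, resid)) / n + scales[j] * coefs[j]
            new = soft_threshold(rho, lam) / scales[j]
            delta = new - coefs[j]
            if delta != 0.0:
                resid = [r - delta * x for r, x in zip(resid, col)]
                coefs[j] = new
    return {j for j, b in enumerate(coefs) if b != 0.0}

def ols(columns, target):
    """Least-squares coefficients (intercept first) via the normal equations."""
    n = len(target)
    design = [[1.0] * n] + [list(c) for c in columns]
    k = len(design)
    # Augmented matrix [X'X | X'y], solved by Gauss-Jordan elimination.
    a = [[sum(u * v for u, v in zip(design[i], design[j])) for j in range(k)]
         + [sum(u * t for u, t in zip(design[i], target))] for i in range(k)]
    for i in range(k):
        pivot = max(range(i, k), key=lambda r: abs(a[r][i]))
        a[i], a[pivot] = a[pivot], a[i]
        for r in range(k):
            if r != i:
                factor = a[r][i] / a[i][i]
                a[r] = [x - factor * y for x, y in zip(a[r], a[i])]
    return [a[i][k] / a[i][i] for i in range(k)]

# Synthetic data: control 0 drives both the trait and income (a confounder),
# control 1 drives income only, and the remaining controls are pure noise.
random.seed(0)
n, p = 600, 6
TRUE_EFFECT = 0.10
controls = [[random.gauss(0, 1) for _ in range(n)] for _ in range(p)]
trait = [1.0 if controls[0][i] + random.gauss(0, 1) > 0 else 0.0 for i in range(n)]
log_income = [11.0 + TRUE_EFFECT * trait[i] + 0.5 * controls[0][i]
              + 0.3 * controls[1][i] + random.gauss(0, 0.2) for i in range(n)]

# Steps 1 and 2: select controls that predict income or predict the trait.
selected = sorted(lasso_selected(controls, log_income, lam=0.1)
                  | lasso_selected(controls, trait, lam=0.1))

# Step 3: OLS of log income on the trait plus the selected controls.
effect = ols([trait] + [controls[j] for j in selected], log_income)[1]
naive = ols([trait], log_income)[1]  # no controls, for comparison

print("selected controls:", selected)
print("naive estimate:", round(naive, 3))
print("double-selection estimate:", round(effect, 3))
```

In this simulation the regression without controls overstates the trait's effect because the confounding control is omitted, while the double-selection estimate should land near the simulated value of 0.10. A real application would use a tested lasso implementation and the paper's penalty choice rather than this hand-rolled version.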

This post has been published on www.productschool.com communities